Overview

When

Consider adding a custom resource to Kubernetes if you want to define new controllers, application configuration objects, or other declarative APIs. Custom resources are most commonly used to manage complex, stateful applications.

How

Custom resources can appear and disappear in a running cluster through dynamic registration, and cluster admins can update custom resources independently of the cluster itself. Once a custom resource is installed, users can create and access its objects using kubectl, just as they do for built-in resources like Pods.
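Installing a custom resource means submitting a CustomResourceDefinition (CRD) manifest to the API server. The sketch below builds a minimal manifest as a plain dict and prints it as JSON. Such manifests are normally written directly in YAML; Python is used here only to keep the examples in one language. `kubectl apply -f` accepts JSON as well as YAML.

Several names are illustrative placeholders chosen for this sketch, not anything Kubernetes requires:

- the `CronTab` kind
- the `stable.example.com` group
- the `cronSpec`/`image`/`replicas` fields
- the helper functions `crontab_crd` and `crontab_object`

```python
import json


def crontab_crd(group: str = "stable.example.com") -> dict:
    """Build a minimal apiextensions.k8s.io/v1 CustomResourceDefinition.

    Kind, group and spec fields are hypothetical placeholders.
    """
    plural = "crontabs"
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The CRD's name must have the form <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {
                "plural": plural,
                "singular": "crontab",
                "kind": "CronTab",
                "shortNames": ["ct"],
            },
            "versions": [
                {
                    "name": "v1",
                    "served": True,   # exposed through the REST API
                    "storage": True,  # exactly one version is the storage version
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "spec": {
                                    "type": "object",
                                    "properties": {
                                        "cronSpec": {"type": "string"},
                                        "image": {"type": "string"},
                                        "replicas": {"type": "integer"},
                                    },
                                }
                            },
                        }
                    },
                }
            ],
        },
    }


def crontab_object(name: str, cron_spec: str, image: str) -> dict:
    """Build an object of the new (hypothetical) CronTab kind."""
    return {
        "apiVersion": "stable.example.com/v1",  # <group>/<version>
        "kind": "CronTab",
        "metadata": {"name": name},
        "spec": {"cronSpec": cron_spec, "image": image},
    }


if __name__ == "__main__":
    # Pipe each document into `kubectl apply -f -`: the CRD first, then objects.
    print(json.dumps(crontab_crd(), indent=2))
    print(json.dumps(crontab_object("my-cron", "* * * * */5", "my-image"), indent=2))
```

Once the CRD is registered, objects of the new kind behave like built-in ones from kubectl's point of view. For example, `kubectl get crontabs` (or the short name `kubectl get ct`) lists them.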

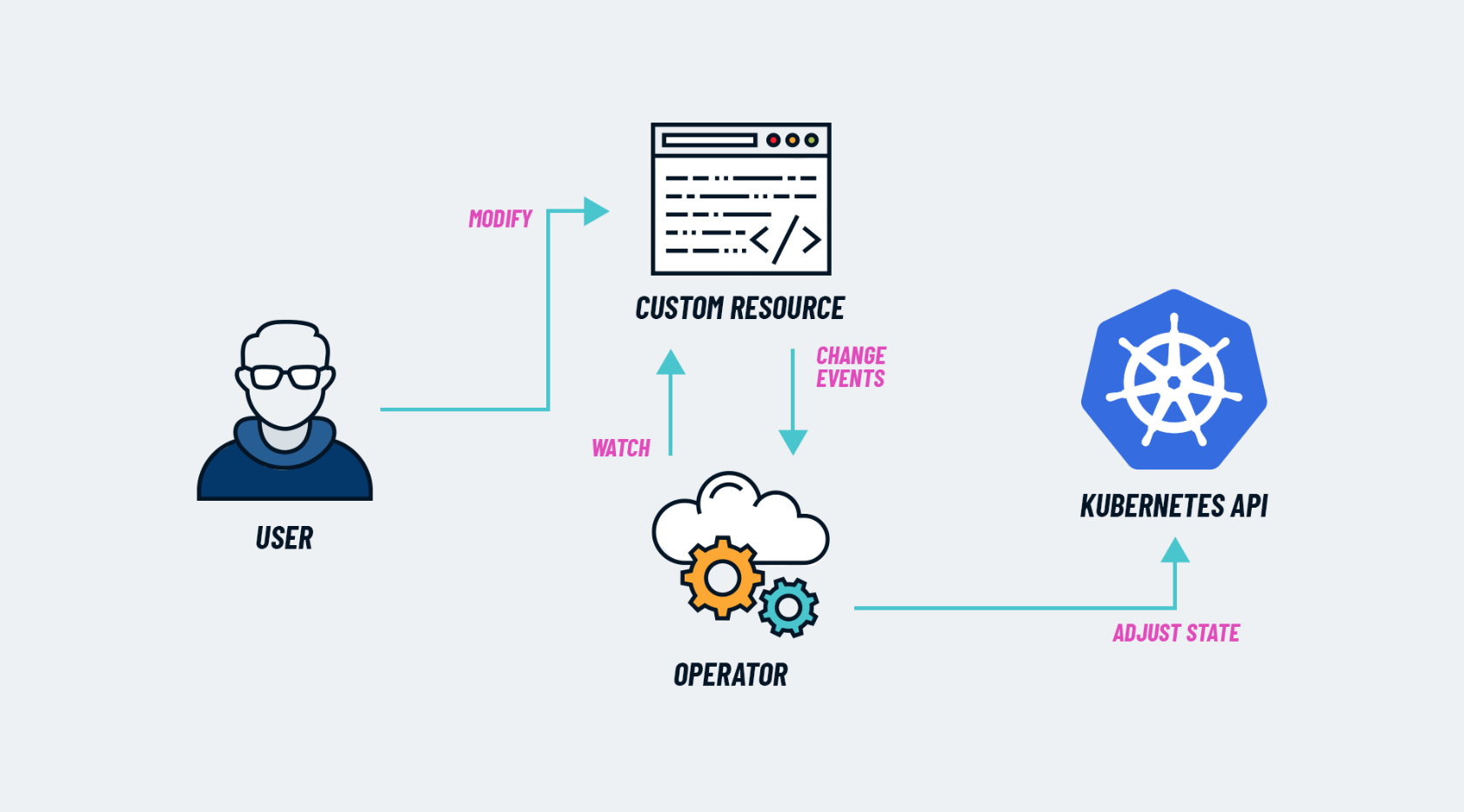

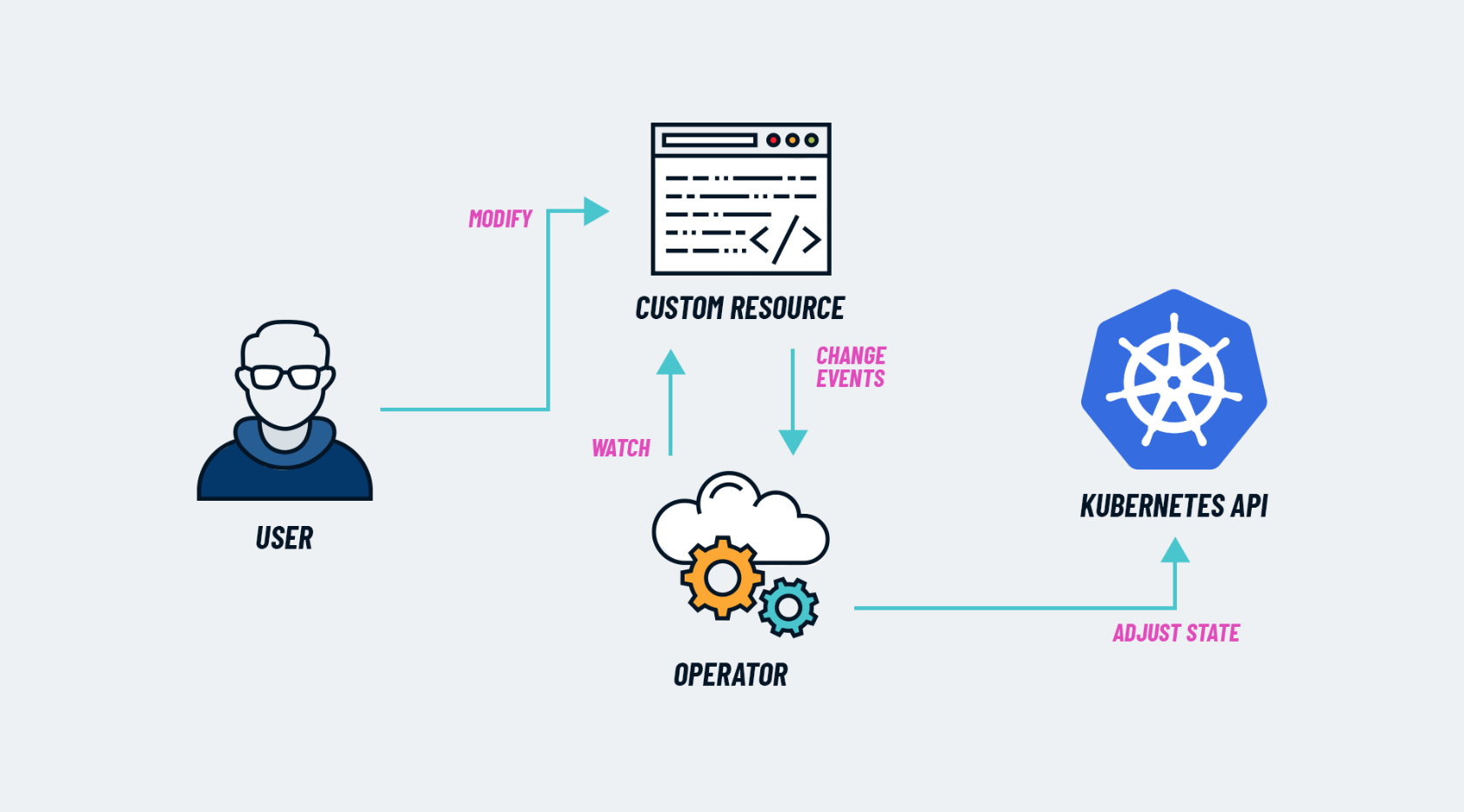

Operator pattern

The combination of a custom resource API and a control loop is called the Operator pattern. Operators are used to manage specific, usually stateful, applications.
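The control-loop half of the pattern can be illustrated with a minimal, hypothetical sketch. The loop repeatedly compares the desired state declared in a custom resource's spec with the observed state, and acts on the difference.

`CronTabSpec`, `FakeCluster`, and `reconcile` are invented names. A real operator would read the custom resource from the API server (typically via watches) and manage real Pods through a client library, rather than mutating an in-memory dict.

```python
from dataclasses import dataclass, field


@dataclass
class CronTabSpec:
    """Desired state, as a user might declare it in a hypothetical CronTab resource."""
    image: str
    replicas: int


@dataclass
class FakeCluster:
    """In-memory stand-in for observed cluster state (illustration only)."""
    pods: dict = field(default_factory=dict)  # pod name -> image
    _next_id: int = 0

    def create_pod(self, image: str) -> str:
        self._next_id += 1
        name = f"pod-{self._next_id}"
        self.pods[name] = image
        return name

    def delete_pod(self, name: str) -> None:
        del self.pods[name]


def reconcile(spec: CronTabSpec, cluster: FakeCluster) -> list:
    """One pass of the control loop; returns the actions it took.

    Reconciling an already-converged cluster takes no actions, so the loop
    can safely run again after every change or on a timer.
    """
    actions = []
    # Replace pods whose image no longer matches the spec.
    for name, image in sorted(cluster.pods.items()):
        if image != spec.image:
            cluster.delete_pod(name)
            actions.append(f"delete {name}")
    # Scale down if there are too many pods...
    current = sorted(cluster.pods)
    while len(current) > spec.replicas:
        name = current.pop()
        cluster.delete_pod(name)
        actions.append(f"delete {name}")
    # ...or scale up if there are too few.
    for _ in range(spec.replicas - len(current)):
        actions.append(f"create {cluster.create_pod(spec.image)}")
    return actions


if __name__ == "__main__":
    cluster = FakeCluster()
    spec = CronTabSpec(image="app:v1", replicas=3)
    print(reconcile(spec, cluster))  # ['create pod-1', 'create pod-2', 'create pod-3']
    print(reconcile(spec, cluster))  # [] (already converged)
    spec.replicas = 1                # the user edits the custom resource
    print(reconcile(spec, cluster))  # ['delete pod-3', 'delete pod-2']
```

The key design choice is that `reconcile` is level-based rather than event-based. It never asks "what changed?", only "how does the current state differ from the spec?", so a missed or duplicated trigger does no harm.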

Kubernetes provides two ways to add custom resources to your cluster:

- CRDs (CustomResourceDefinitions)

- API Aggregation

CRDs are simple and can be created without any programming. API Aggregation requires programming, but gives you more control over API behaviors such as how data is stored and how objects are converted between API versions.

CRDs are easier to use. Aggregated APIs are more flexible. Choose the method that best meets your needs.

Typically, CRDs are a good fit if:

- You have a handful of fields

- You are using the resource within your company, or as part of a small open-source project (as opposed to a commercial product)