k8s_device_plugin

Overview

Kubernetes provides a device plugin framework that you can use to advertise system hardware resources to the kubelet, which then reports those resources to the API server.

Instead of customizing the code of Kubernetes itself, vendors can implement a device plugin that you deploy either manually or as a DaemonSet. The targeted devices include GPUs, high-performance NICs, FPGAs, InfiniBand adapters, and other similar computing resources that may require vendor-specific initialization and setup.

The workflow of the device plugin is divided into two parts:

- Resource reporting at startup and health monitoring afterward

- Scheduling and allocation when a pod uses a device

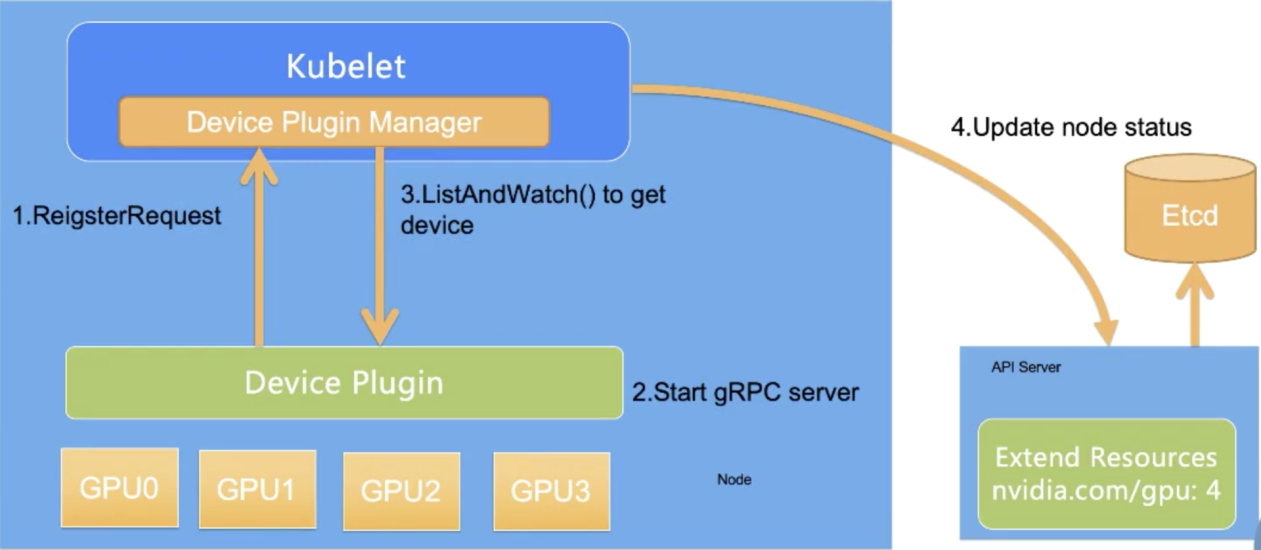

Report and Monitor Resources

Each type of hardware device is managed by its own device plugin. The device plugin connects through gRPC, as a client, to the kubelet's device plugin manager and reports the device plugin API version, the resource name, and the UNIX socket on which the plugin itself listens.
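
A minimal sketch of the information a plugin hands to the kubelet when it registers, written in Go because device plugins are usually implemented in Go. The `RegisterRequest` type below is a simplified, hypothetical stand-in for the generated message in `k8s.io/kubelet/pkg/apis/deviceplugin/v1beta1`; the directory `/var/lib/kubelet/device-plugins/` and the version string `v1beta1` mirror that package's constants, while the socket name `nvidia-gpu.sock` and the simplified resource-name check are illustrative assumptions.

```go
package main

import (
	"errors"
	"fmt"
	"path"
	"strings"
)

// Directory and API version used by the kubelet's device plugin mechanism
// (mirroring DevicePluginPath and Version in the v1beta1 API package).
const (
	devicePluginDir = "/var/lib/kubelet/device-plugins/"
	apiVersion      = "v1beta1"
)

// RegisterRequest is a simplified, hypothetical stand-in for the generated
// v1beta1 message: what a plugin tells the kubelet about itself.
type RegisterRequest struct {
	Version      string // device plugin API version the plugin implements
	Endpoint     string // socket file name, relative to devicePluginDir
	ResourceName string // extended resource name, e.g. "nvidia.com/gpu"
}

// newRegisterRequest builds the request after a simplified sanity check:
// the resource name must look like "<vendor-domain>/<resource>".
func newRegisterRequest(resourceName, socketName string) (RegisterRequest, error) {
	parts := strings.Split(resourceName, "/")
	if len(parts) != 2 || parts[0] == "" || parts[1] == "" {
		return RegisterRequest{}, errors.New("resource name must be <domain>/<name>")
	}
	if strings.Contains(socketName, "/") {
		return RegisterRequest{}, errors.New("endpoint must be a file name inside the plugin directory")
	}
	return RegisterRequest{Version: apiVersion, Endpoint: socketName, ResourceName: resourceName}, nil
}

// socketPath is where the kubelet will dial the plugin's gRPC server.
func socketPath(req RegisterRequest) string {
	return path.Join(devicePluginDir, req.Endpoint)
}

func main() {
	req, err := newRegisterRequest("nvidia.com/gpu", "nvidia-gpu.sock")
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", req)
	fmt.Println(socketPath(req))
}
```

A real plugin sends this request over gRPC to the kubelet's own socket (`kubelet.sock`) in the same directory; that call is omitted here to keep the example dependency-free.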

- Step 1: The device plugin registers with the kubelet so that it can interact with Kubernetes. A node may have multiple devices, each type handled by its own plugin. Acting as a client, the device plugin reports the following information to the kubelet:

  - (1) the name of the resource it manages, such as a GPU or RDMA device (for example, nvidia.com/gpu);

  - (2) the file path of the UNIX socket on which the device plugin listens, so that the kubelet can call it;

  - (3) the device plugin API version it implements.

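- Step 2: The device plugin runs a gRPC server on that socket and serves the kubelet's calls through it. The listening address and API version are the ones reported in Step 1. In practice, plugins typically start this server just before sending the registration request, so that the kubelet can connect as soon as registration succeeds.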

- Step 3: Once the gRPC server is running, the kubelet opens a long-lived ListAndWatch stream to the device plugin to discover device IDs and track device health. The device plugin notifies the kubelet whenever a device becomes unhealthy. If the unhealthy device is idle, the kubelet removes it from the list of schedulable devices. If the unhealthy device is in use by a pod, the kubelet takes no further action, because killing the pod would be a high-risk action.

- Step 4: The kubelet publishes these devices in the node status and sends the device quantity to the Kubernetes API server. The scheduler makes scheduling decisions based on this information.
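
The health handling in Step 3 can be sketched as a loop over a stream of device lists. In this hypothetical model a Go channel stands in for the server-streaming ListAndWatch RPC, `Device` is a trimmed-down version of the real message, and each message carries the plugin's full device list. Unhealthy devices drop out of the healthy set, while a container already using an unhealthy device keeps it, so its pod is not killed.

```go
package main

import (
	"fmt"
	"sort"
)

// Device is a trimmed-down, hypothetical version of the ListAndWatch device message.
type Device struct {
	ID     string
	Health string // "Healthy" or "Unhealthy"
}

// deviceState is a hypothetical kubelet-side view of one resource's devices.
type deviceState struct {
	healthy map[string]bool // device IDs that may be offered for scheduling
	inUse   map[string]bool // device IDs already assigned to running containers
}

// apply processes one ListAndWatch message. Each message carries the full
// device list, so the healthy set is rebuilt rather than patched. It returns
// the unhealthy devices that are still assigned to a container: they leave the
// healthy set, but their assignment (and the pod) is left untouched.
func (s *deviceState) apply(devs []Device) (unhealthyInUse []string) {
	s.healthy = make(map[string]bool)
	for _, d := range devs {
		if d.Health == "Healthy" {
			s.healthy[d.ID] = true
		} else if s.inUse[d.ID] {
			unhealthyInUse = append(unhealthyInUse, d.ID)
		}
	}
	sort.Strings(unhealthyInUse)
	return unhealthyInUse
}

// healthyIDs returns the sorted healthy device IDs; their count is the
// quantity the kubelet reports to the API server in Step 4.
func (s *deviceState) healthyIDs() []string {
	ids := make([]string, 0, len(s.healthy))
	for id := range s.healthy {
		ids = append(ids, id)
	}
	sort.Strings(ids)
	return ids
}

func main() {
	// A buffered channel stands in for the server-streaming ListAndWatch RPC.
	stream := make(chan []Device, 2)
	stream <- []Device{{ID: "gpu-0", Health: "Healthy"}, {ID: "gpu-1", Health: "Healthy"}}
	stream <- []Device{{ID: "gpu-0", Health: "Healthy"}, {ID: "gpu-1", Health: "Unhealthy"}}
	close(stream)

	s := &deviceState{inUse: map[string]bool{"gpu-1": true}}
	for devs := range stream {
		left := s.apply(devs)
		fmt.Println("healthy:", s.healthyIDs(), "unhealthy but in use:", left)
	}
}
```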

The kubelet reports only the GPU quantity to the Kubernetes API server. The kubelet's device plugin manager keeps the GPU ID list itself and assigns GPU IDs to containers. The Kubernetes scheduler therefore never sees the GPU ID list, only the GPU quantity.

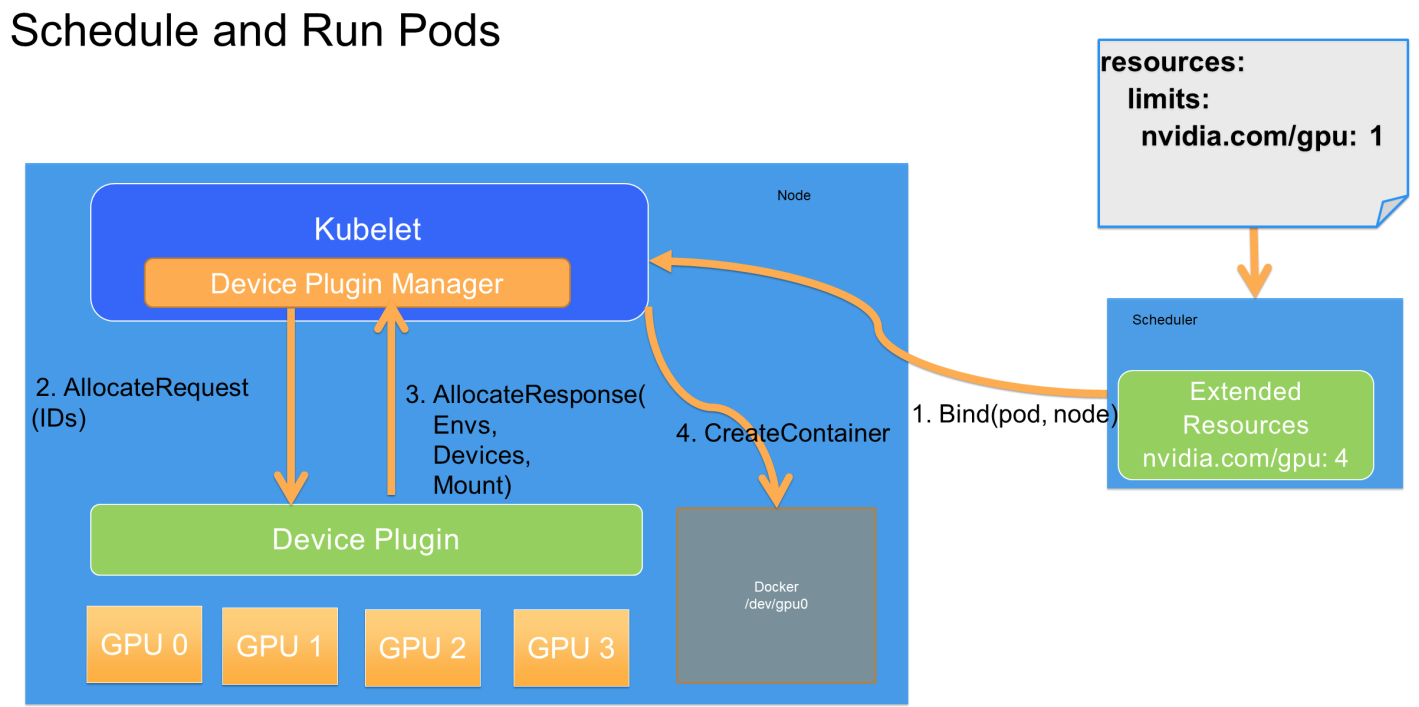

Resource Allocation

When a pod wants to use a GPU, it declares the GPU resource and the required quantity in resources.limits of its container spec, for example nvidia.com/gpu: 1. The scheduler finds a node that has enough available GPUs, deducts the requested quantity (here, 1) from that node's available GPU count, and binds the pod to the node.
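
The scheduler's part of this can be sketched using counts alone, since counts are all it has. This is a deliberately simplified, hypothetical first-fit filter: a real scheduler also checks CPU, memory, taints, and other constraints, and scores candidate nodes rather than taking the first fit. The `node` type and its field names are illustrative.

```go
package main

import (
	"errors"
	"fmt"
)

// node is a hypothetical scheduler-side view: only GPU counts, no IDs.
type node struct {
	name        string
	allocatable int // GPUs the kubelet reported for this node
	requested   int // GPUs already claimed by pods bound to this node
}

// bindPod picks the first node with enough free GPUs, deducts the pod's
// request from that node, and returns the node name (the "binding").
func bindPod(nodes []*node, gpuLimit int) (string, error) {
	for _, n := range nodes {
		if n.allocatable-n.requested >= gpuLimit {
			n.requested += gpuLimit
			return n.name, nil
		}
	}
	return "", errors.New("no node has enough free GPUs")
}

func main() {
	nodes := []*node{
		{name: "node-a", allocatable: 1, requested: 1}, // already full
		{name: "node-b", allocatable: 2},
	}
	// Equivalent of a container declaring resources.limits: nvidia.com/gpu: 1.
	name, err := bindPod(nodes, 1)
	if err != nil {
		panic(err)
	}
	fmt.Println(name, "free GPUs left:", nodes[1].allocatable-nodes[1].requested)
}
```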

After the binding is complete, the kubelet on the selected node creates the container. When the kubelet finds that the container requests a GPU, its internal device plugin manager selects an available GPU from the GPU ID list and assigns it to the container.
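
The selection step inside the kubelet might look like the following sketch. `deviceManager` is a hypothetical model of the device plugin manager for a single resource: it computes the free IDs by subtracting those already assigned to other containers and, for determinism, picks them in sorted order. The real manager has more inputs (for example, it can ask the plugin for a preferred allocation), so treat this as an outline of the bookkeeping only.

```go
package main

import (
	"fmt"
	"sort"
)

// deviceManager is a hypothetical, simplified model of the kubelet's device
// plugin manager for one resource: it alone knows the device IDs.
type deviceManager struct {
	healthy   []string            // schedulable device IDs from ListAndWatch
	allocated map[string][]string // container key -> assigned device IDs
}

// allocate picks n free device IDs for a container and records the assignment.
// IDs are taken in sorted order purely for determinism.
func (m *deviceManager) allocate(container string, n int) ([]string, error) {
	used := make(map[string]bool)
	for _, ids := range m.allocated {
		for _, id := range ids {
			used[id] = true
		}
	}
	free := make([]string, 0, len(m.healthy))
	for _, id := range m.healthy {
		if !used[id] {
			free = append(free, id)
		}
	}
	if len(free) < n {
		return nil, fmt.Errorf("need %d devices, only %d free", n, len(free))
	}
	sort.Strings(free)
	picked := append([]string(nil), free[:n]...)
	m.allocated[container] = picked
	return picked, nil
}

func main() {
	m := &deviceManager{
		healthy:   []string{"gpu-1", "gpu-0", "gpu-2"},
		allocated: map[string][]string{"pod-a/main": {"gpu-0"}},
	}
	ids, err := m.allocate("pod-b/train", 1)
	if err != nil {
		panic(err)
	}
	// These IDs are what the kubelet would put into the Allocate request.
	fmt.Println(ids)
}
```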

The kubelet then sends an Allocate request to the device plugin. The request contains the list of device IDs of the GPUs to be assigned to the container.

After receiving the Allocate request, the device plugin finds the device path, driver directory, and environment variables related to the device ID, and returns the information to the kubelet through an Allocate response.
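
On the plugin side, answering Allocate amounts to a lookup from device IDs to host resources. The sketch assumes a hypothetical host layout in which device ID `gpu-N` corresponds to `/dev/nvidiaN` and the driver files live under `/usr/local/nvidia`; `NVIDIA_VISIBLE_DEVICES` is used only as an example environment variable. The response types are simplified, hypothetical mirrors of the v1beta1 `ContainerAllocateResponse`, `DeviceSpec`, and `Mount` messages.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Simplified, hypothetical mirrors of the v1beta1 Allocate response messages.
type DeviceSpec struct {
	ContainerPath, HostPath, Permissions string
}
type Mount struct {
	ContainerPath, HostPath string
	ReadOnly                bool
}
type ContainerAllocateResponse struct {
	Envs    map[string]string
	Mounts  []Mount
	Devices []DeviceSpec
}

// Hypothetical driver directory on the host, for illustration only.
const driverDir = "/usr/local/nvidia"

// allocate answers one container's Allocate request: for each device ID it
// returns the device node to expose, plus the driver mount and env vars.
func allocate(deviceIDs []string) (ContainerAllocateResponse, error) {
	resp := ContainerAllocateResponse{Envs: map[string]string{}}
	ids := append([]string(nil), deviceIDs...)
	sort.Strings(ids)
	var indices []string
	for _, id := range ids {
		if !strings.HasPrefix(id, "gpu-") || len(id) == len("gpu-") {
			return ContainerAllocateResponse{}, fmt.Errorf("unknown device ID %q", id)
		}
		idx := strings.TrimPrefix(id, "gpu-")
		dev := "/dev/nvidia" + idx // assumed ID -> device node mapping
		resp.Devices = append(resp.Devices, DeviceSpec{ContainerPath: dev, HostPath: dev, Permissions: "rw"})
		indices = append(indices, idx)
	}
	// Mount the driver directory read-only at the same path in the container.
	resp.Mounts = []Mount{{ContainerPath: driverDir, HostPath: driverDir, ReadOnly: true}}
	// Example variable telling the GPU runtime which devices are visible.
	resp.Envs["NVIDIA_VISIBLE_DEVICES"] = strings.Join(indices, ",")
	return resp, nil
}

func main() {
	resp, err := allocate([]string{"gpu-1", "gpu-0"})
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", resp)
}
```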

Using the received device paths, driver directory, and environment variables, the kubelet instructs the container runtime (Docker in this case) to create the container. The created container has the GPU device attached and the required driver directory mounted. This completes the process of assigning a GPU to a pod in Kubernetes.