linux-numa

Overview

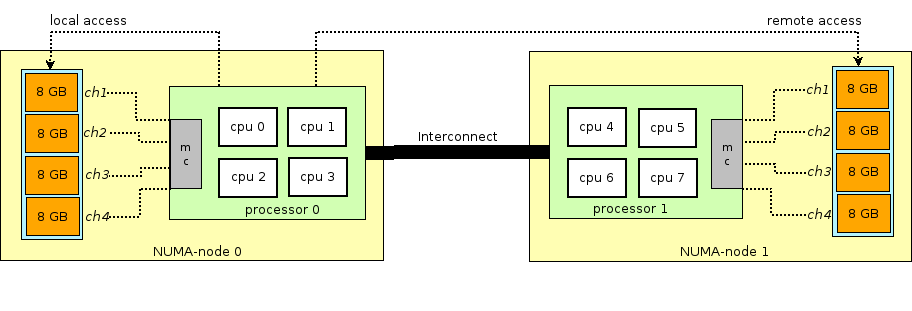

NUMA (Non-Uniform Memory Access) is a hardware design that groups a system's cores into multiple clusters. Each cluster has its own local memory region, yet cores in any cluster can still access all memory in the system. However, when a processor uses memory outside its own cluster's region (remote memory), the access takes longer. For performance-critical applications, minimizing accesses to memory in other clusters is critical.

- A socket refers to the physical location where a processor package plugs into a motherboard. The processor that plugs into the motherboard is also known as a socket.
- A core is an individual execution unit within a processor that can independently execute a software execution thread and maintains its execution state separate from the execution state of any other cores within a processor.
- A thread refers to a hardware-based thread execution capability. For example, the Intel Xeon 7560 has eight cores, each of which has hardware that can effectively execute two software execution threads simultaneously, yielding 16 threads.

```
$ lscpu
```
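As a quick check of the socket/core/thread definitions above, the number of logical CPUs is sockets × cores per socket × threads per core. The minimal Python sketch below computes that from `lscpu` text. The sample output is hypothetical: it is shaped after the Xeon 7560 example with one socket. The sketch also assumes English field names, so run `lscpu` with `LC_ALL=C` if your locale translates them. The helper names are illustrative.

```python
def lscpu_fields(text):
    """Split `lscpu` output ("Key:   value" lines) into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            fields[key.strip()] = value.strip()
    return fields

def logical_cpus(text):
    """Sockets x cores per socket x threads per core."""
    f = lscpu_fields(text)
    return int(f["Socket(s)"]) * int(f["Core(s) per socket"]) * int(f["Thread(s) per core"])

# Hypothetical excerpt shaped like the Xeon 7560 example above (one socket)
sample = """\
Architecture:        x86_64
Thread(s) per core:  2
Core(s) per socket:  8
Socket(s):           1
NUMA node(s):        1
"""

print(logical_cpus(sample))  # 16
```

`lscpu` also reports this total directly on its `CPU(s):` line. The point here is only the arithmetic behind it.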

From the hardware perspective, a NUMA system is a computer platform that comprises multiple components or assemblies, each of which may contain 0 or more CPUs, local memory, and/or IO buses. For brevity, we’ll refer to these components/assemblies as ‘cells’.

The cells of the NUMA system are connected together with some sort of system interconnect; for example, crossbars and point-to-point links are common types of NUMA system interconnect. Both types of interconnect can be aggregated to create NUMA platforms with cells at multiple distances from other cells.

Memory access time and effective memory bandwidth vary depending on how far the cell containing the CPU or IO bus making the memory access is from the cell containing the target memory.

Linux divides the system’s hardware resources into multiple software abstractions called “nodes”. Linux maps the nodes onto the physical cells of the hardware platform, abstracting away some of the details for some architectures. As with physical cells, software nodes may contain 0 or more CPUs, memory and/or IO buses. And, again, accesses to memory on “closer” nodes (nodes that map to closer cells) will generally experience faster access times and higher effective bandwidth than accesses to more remote nodes.

For each node with memory, Linux constructs an independent memory management subsystem, complete with its own free page lists, in-use page lists, usage statistics and locks to mediate access. In addition, for each memory zone (one or more of DMA, DMA32, NORMAL, HIGH_MEMORY, MOVABLE), Linux constructs an ordered “zonelist”. A zonelist specifies the zones/nodes to visit when a selected zone/node cannot satisfy the allocation request. This situation, when a zone has no available memory to satisfy a request, is called “overflow” or “fallback”.

By default, Linux will attempt to satisfy memory allocation requests from the node to which the CPU that executes the request is assigned. Specifically, Linux will attempt to allocate from the first node in the appropriate zonelist for the node where the request originates. This is called “local allocation.” If the “local” node cannot satisfy the request, the kernel will examine other nodes’ zones in the selected zonelist looking for the first zone in the list that can satisfy the request.
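The local allocation and zonelist fallback described above can be illustrated with a toy model. This Python sketch makes several assumptions:

- one zone per node;
- a hypothetical 4-node distance table in the style of ACPI SLIT values (10 = local, larger = farther);
- no watermarks;
- each request is served whole from a single node.

`build_zonelist` and `local_alloc` are illustrative names, not kernel functions.

```python
# Toy model: one zone per node, no watermarks, whole request from one node.
DISTANCE = [  # hypothetical SLIT-style table: 10 = local, larger = farther
    [10, 21, 31, 21],
    [21, 10, 21, 31],
    [31, 21, 10, 21],
    [21, 31, 21, 10],
]

def build_zonelist(node, distance=DISTANCE):
    """Nodes ordered nearest-first from `node` (ties broken by node ID)."""
    return sorted(range(len(distance)), key=lambda n: (distance[node][n], n))

def local_alloc(free_pages, cpu_node, npages, distance=DISTANCE):
    """Serve the request from the first node in cpu_node's zonelist that can."""
    for node in build_zonelist(cpu_node, distance):
        if free_pages[node] >= npages:
            free_pages[node] -= npages
            return node
    return None  # no node in the zonelist can satisfy the request

free = [2, 0, 5, 5]
print(build_zonelist(0))        # [0, 1, 3, 2]
print(local_alloc(free, 0, 1))  # 0: the local node has room
print(local_alloc(free, 0, 4))  # 3: nodes 0 and 1 are short, node 3 is next-nearest
print(local_alloc(free, 0, 6))  # None
```

Ties at the same distance are broken by node ID here purely to keep the example deterministic.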

NUMA

CPU

Each CPU is assigned its own local memory and can also access the memory of other CPUs in the system.

Each processor contains many cores with a shared on-chip cache and off-chip memory, and memory access costs vary across different parts of the memory within a server.

Memory

In Non-Uniform Memory Access (NUMA), system memory is divided into regions called nodes, which are allocated to particular CPUs or sockets. Access to memory that is local to a CPU is faster than access to memory connected to remote CPUs on that system.

Memory allocation policies for NUMA systems

Linux defines the following policies. These are the modes of the set_mempolicy(2) system call, whose nodemask and maxnode arguments select the nodes.

- Default (local allocation): This mode specifies that any non-default thread memory policy be removed, so that the memory policy “falls back” to the system default policy. The system default policy is “local allocation”, that is, allocate memory on the node of the CPU that triggered the allocation. nodemask must be specified as NULL. If the “local node” contains no free memory, the system will attempt to allocate memory from a “nearby” node.
- Bind: This mode defines a strict policy that restricts memory allocation to the nodes specified in nodemask. If nodemask specifies more than one node, page allocations will come from the node with the lowest numeric node ID first, until that node contains no free memory. Allocations will then come from the node with the next highest node ID specified in nodemask and so forth, until none of the specified nodes contain free memory. Pages will not be allocated from any node not specified in the nodemask.
- Interleave: This mode interleaves page allocations across the nodes specified in nodemask in numeric node ID order. This optimizes for bandwidth instead of latency by spreading out pages and memory accesses to those pages across multiple nodes. However, accesses to a single page will still be limited to the memory bandwidth of a single node.
- Preferred: This mode sets the preferred node for allocation. The kernel will try to allocate pages from this node first and fall back to “nearby” nodes if the preferred node is low on free memory. If nodemask specifies more than one node ID, the first node in the mask will be selected as the preferred node. If the nodemask and maxnode arguments specify the empty set, then the policy specifies “local allocation” (like the system default policy discussed above).
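The four policies can be compared side by side in a toy Python model. Each policy produces an order in which nodes are tried, and a single allocator walks that order.

The model makes several assumptions:

- a hypothetical 4-node distance table;
- one page per allocation and no watermarks;
- Bind follows the lowest-node-ID-first order described above;
- the Interleave fallback only rotates through the mask.

None of these names are kernel or libnuma APIs.

```python
# Toy model of the four policies; one page per allocation, no watermarks.
DISTANCE = [  # hypothetical 4-node SLIT-style distance table
    [10, 21, 31, 21],
    [21, 10, 21, 31],
    [31, 21, 10, 21],
    [21, 31, 21, 10],
]

def by_distance(node):
    """All nodes, nearest first from `node` (ties broken by node ID)."""
    return sorted(range(len(DISTANCE)), key=lambda n: (DISTANCE[node][n], n))

def default_order(cpu_node):
    # Default: local allocation first, then nearby nodes.
    return by_distance(cpu_node)

def bind_order(nodemask):
    # Bind: only nodes in the mask, lowest node ID first, nothing else.
    return sorted(nodemask)

def preferred_order(nodemask, cpu_node):
    # Preferred: first node in the mask; an empty mask means local allocation.
    return by_distance(min(nodemask) if nodemask else cpu_node)

class Interleave:
    """Interleave: round-robin over the mask in numeric node ID order."""
    def __init__(self, nodemask):
        self.nodes = sorted(nodemask)
        self.next = 0

    def order(self):
        i = self.next % len(self.nodes)
        self.next += 1
        # Start at this round's target; if it is full, continue around the
        # mask (fallback outside the mask is not modeled).
        return self.nodes[i:] + self.nodes[:i]

def allocate(free, order):
    """Take one page from the first node in `order` that has a free page."""
    for node in order:
        if free[node] > 0:
            free[node] -= 1
            return node
    return None

free = [4, 2, 4, 4]
print([allocate(free, bind_order({2, 1})) for _ in range(7)])  # [1, 1, 2, 2, 2, 2, None]

il = Interleave({0, 2})
free = [4, 4, 4, 4]
print([allocate(free, il.order()) for _ in range(4)])  # [0, 2, 0, 2]

print(preferred_order({3}, cpu_node=0))  # [3, 0, 2, 1]
```

Note the last `None` under Bind: once nodes 1 and 2 are exhausted, the strict policy refuses nodes 0 and 3 even though they have free pages. Preferred, by contrast, simply moves on to the next-nearest node.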

Debug

Taskset

The taskset command is considered the most portable Linux way of setting or retrieving the CPU affinity (binding) of a running process (thread), and it can also start a new command with a given affinity. It only sets CPU affinity; it does not affect memory allocation.

```
# start process on given cpu
$ taskset -c <cpu-list> <command>
```
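taskset is a front end to the sched_setaffinity(2)/sched_getaffinity(2) system calls. On Linux, Python exposes these as `os.sched_setaffinity` and `os.sched_getaffinity`. The sketch below does two things:

- It shows the hex-mask convention taskset uses by default: bit i set means CPU i is allowed.
- It pins the current process to a single CPU and then restores the original affinity.

As with taskset, only CPU placement changes; memory placement is untouched. The helper names are hypothetical, and the affinity change is skipped on platforms without these calls.

```python
import os

def mask_to_cpus(mask):
    """taskset-style affinity mask (bit i = CPU i) -> set of CPU numbers."""
    return {cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1}

def cpus_to_mask(cpus):
    """Set of CPU numbers -> affinity mask, e.g. {0, 2} -> 0x5."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return mask

# Pin the caller (pid 0) to its lowest allowed CPU, then restore it.
if hasattr(os, "sched_setaffinity"):  # Linux only
    original = os.sched_getaffinity(0)
    try:
        os.sched_setaffinity(0, {min(original)})
        now = os.sched_getaffinity(0)
        print("now running on CPUs", sorted(now), "mask", hex(cpus_to_mask(now)))
        os.sched_setaffinity(0, original)  # restore the previous affinity
    except OSError as err:  # e.g. blocked by a sandbox or container policy
        print("could not change affinity:", err)
```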

numactl

numactl can be used to control the NUMA policy for processes, shared memory, or both. One key thing about numactl is that, unlike taskset, you can’t use it to change the policy of a running application.

```
$ yum install -y numactl
```

| Option | Description | Example |
|---|---|---|
| `--interleave=<nodes>` | This policy has the application allocate memory in a round-robin fashion on “nodes.” With only two NUMA nodes, memory is allocated first on node 0, then node 1, node 0, node 1, and so on. If the allocation cannot be satisfied on the current interleave target node (node x), it falls back to other nodes, but in the same round-robin fashion. You can choose which nodes are used for memory interleaving or use them all. | `--interleave=0,1` or `--interleave=all` |
| `--membind=<nodes>` | This policy forces memory to be allocated from the list of provided nodes. | `--membind=0` or `--membind=all` |
| `--preferred=<node>` | This policy allocates memory on the node you specify, but if it can’t, falls back to using memory from other nodes. | `--preferred=1` |
| `--localalloc` | This policy forces allocation of memory on the current node. | `--localalloc` |
| `--cpunodebind=<nodes>` | This option causes processes to run only on the CPUs of the specified node(s). | `--cpunodebind=0` |
| `--physcpubind=<CPUs>` | This policy executes the process(es) on the list of CPUs provided. | `--physcpubind=+0-4,8-12` |
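The node and CPU lists in the options above use a compact range syntax. The minimal Python sketch below does two things:

- It expands the plain `N,N-N` form into a list of numbers.
- It assembles a numactl command line for `subprocess.run`.

`parse_list`, `numactl_argv`, and `./app` are hypothetical. The `all`, `+`, and `!` forms that numactl also accepts are deliberately not handled.

```python
import shlex

def parse_list(spec):
    """Expand a numactl-style list such as '0-4,8-12' into sorted integers.

    Simplified: numactl also accepts 'all', a '+' prefix (relative to the
    current cpuset) and '!' (inversion); those forms are not handled here.
    """
    result = set()
    for part in spec.split(","):
        lo, _, hi = part.partition("-")
        result.update(range(int(lo), int(hi or lo) + 1))
    return sorted(result)

def numactl_argv(command, cpunodebind=None, membind=None, interleave=None):
    """Build an argv list for subprocess.run() (hypothetical helper)."""
    if membind is not None and interleave is not None:
        raise ValueError("this sketch allows only one memory policy")
    argv = ["numactl"]
    if cpunodebind is not None:
        argv.append(f"--cpunodebind={cpunodebind}")
    if membind is not None:
        argv.append(f"--membind={membind}")
    if interleave is not None:
        argv.append(f"--interleave={interleave}")
    return argv + list(command)

print(parse_list("0-4,8-12"))  # [0, 1, 2, 3, 4, 8, 9, 10, 11, 12]
# Keep both CPUs and memory on node 0 for a hypothetical ./app
argv = numactl_argv(["./app", "--threads", "8"], cpunodebind=0, membind=0)
print(shlex.join(argv))  # numactl --cpunodebind=0 --membind=0 ./app --threads 8
```

Pairing `--cpunodebind` with `--membind` on the same node keeps both the threads and their pages local, avoiding the remote accesses described in the Overview. Passing `argv` to `subprocess.run(argv, check=True)` would launch the command.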

numastat

The numastat tool is provided by the numactl package, and displays memory statistics (such as allocation hits and misses) for processes and the operating system on a per-NUMA-node basis. The default tracking categories for the numastat command are outlined as follows:

- numa_hit: The number of pages that were successfully allocated to this node.
- numa_miss: The number of pages that were allocated on this node because of low memory on the intended node. Each numa_miss event has a corresponding numa_foreign event on another node.
- numa_foreign: The number of pages initially intended for this node that were allocated to another node instead. Each numa_foreign event has a corresponding numa_miss event on another node.
- interleave_hit: The number of interleave policy pages successfully allocated to this node.
- local_node: The number of pages successfully allocated on this node by a process on this node.
- other_node: The number of pages allocated on this node by a process on another node.

numa_miss vs numa_foreign

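- If a process running on Node 0 requests memory from Node 1 (its preferred node), but the allocation is completed on Node 0, the numa_miss counter for Node 0 increases (and, correspondingly, numa_foreign for Node 1). Memory was requested from A but allocated on B, so B records a miss!
- If a process running on Node 0 requests memory from its local Node 0, but the allocation is completed on Node 1, the numa_foreign counter for Node 0 increases (and, correspondingly, numa_miss for Node 1). Memory intended for A ended up on a foreign node, so A records a foreign allocation!

In both cases, numa_miss is charged to the node that actually served the page and numa_foreign to the node that was intended.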

numa_foreign vs other_node

- numa_foreign is about allocation preference (where memory was intended to be allocated).
- other_node is about process locality (where the process is running).
- Different perspectives!
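To make the bookkeeping concrete, here is a small Python model of the counter definitions above. It replays the two cases from the numa_miss vs numa_foreign comparison. It is a simplified illustration, not kernel code. `record_alloc` and its parameters are hypothetical, and each call stands for a single page allocation.

```python
from collections import Counter, defaultdict

def record_alloc(stats, cpu_node, intended_node, actual_node, interleave=False):
    """Update per-node counters for one page allocation (simplified model)."""
    served = stats[actual_node]
    if actual_node == intended_node:
        served["numa_hit"] += 1
        if interleave:
            served["interleave_hit"] += 1
    else:
        served["numa_miss"] += 1                   # charged to the node that served it
        stats[intended_node]["numa_foreign"] += 1  # charged to the node that was wanted
    # local_node/other_node depend only on where the requesting process runs
    served["local_node" if actual_node == cpu_node else "other_node"] += 1

stats = defaultdict(Counter)
# Case 1: process on node 0 wants node 1, gets node 0
record_alloc(stats, cpu_node=0, intended_node=1, actual_node=0)
# Case 2: process on node 0 wants node 0, gets node 1
record_alloc(stats, cpu_node=0, intended_node=0, actual_node=1)
for node in sorted(stats):
    print(node, dict(stats[node]))
```

Case 2 shows why numa_foreign and other_node are different perspectives. The same event bumps numa_foreign on node 0, the intended node, and other_node on node 1, the node that served a process running elsewhere.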

Options

| Option | Description |
|---|---|
| `-m` | Displays system-wide memory usage information on a per-node basis, similar to the information found in /proc/meminfo. |
| `-p pattern` or `-p PID` | Displays per-node memory information for the specified pattern. If the value for pattern consists of digits, numastat assumes that it is a numerical process identifier. |
| `-s` | Sorts the displayed data in descending order so that the biggest memory consumers (according to the total column) are listed first. |
| `-v` | Displays more verbose information. Namely, process information for multiple processes will display detailed information for each process. |

```
# system wide view of each numa node
```
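The default counters listed above (numa_hit, numa_miss, and so on) are exposed per node by the kernel in `/sys/devices/system/node/nodeN/numastat`, one `name value` pair per line. numastat's default view is built from these files. The Python sketch below parses them and computes a miss ratio for each node. The miss ratio is an illustrative metric of our own: the share of the pages a node served that were intended for another node. The function names are hypothetical, and `root` is a parameter so the code can be pointed at a test directory.

```python
import os
import re

def read_node_numastat(root="/sys/devices/system/node"):
    """Return {node_id: {counter: value}} from each nodeN/numastat file."""
    stats = {}
    if not os.path.isdir(root):
        return stats  # not Linux, or no NUMA information exposed
    for name in os.listdir(root):
        m = re.fullmatch(r"node(\d+)", name)
        path = os.path.join(root, name, "numastat")
        if m and os.path.isfile(path):
            with open(path) as f:
                stats[int(m.group(1))] = {
                    key: int(value)
                    for key, value in (line.split() for line in f if line.strip())
                }
    return stats

def miss_ratio(counters):
    """numa_miss / (numa_hit + numa_miss) for one node; 0.0 if nothing allocated."""
    hit, miss = counters.get("numa_hit", 0), counters.get("numa_miss", 0)
    return miss / (hit + miss) if hit + miss else 0.0

for node, counters in sorted(read_node_numastat().items()):
    print(f"node{node}: miss ratio {miss_ratio(counters):.2%}")
```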

numad

numad is an automatic NUMA affinity management daemon. It monitors NUMA topology and resource usage within a system in order to dynamically improve NUMA resource allocation and management; it periodically scans all processes on the system.

Note that when numad is enabled, its behavior overrides the default behavior of automatic NUMA balancing (performed by the kernel scheduler).

```
$ yum install numad
```
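Because numad overrides the kernel's automatic NUMA balancing, it can be useful to check both. The sketch below does two things:

- It reads the `/proc/sys/kernel/numa_balancing` sysctl. 0 means disabled; a non-zero value means enabled, and newer kernels may define more than one non-zero mode.
- It scans `/proc/<pid>/comm` for a process named `numad`.

The helper names are hypothetical. The paths are parameters so the functions can be pointed at a test directory.

```python
import os

def numa_balancing_state(path="/proc/sys/kernel/numa_balancing"):
    """Return the automatic NUMA balancing setting, or None if unavailable."""
    try:
        with open(path) as f:
            return int(f.read().strip())
    except (OSError, ValueError):
        return None

def process_running(name, proc="/proc"):
    """True if any /proc/<pid>/comm equals `name`."""
    if not os.path.isdir(proc):
        return False
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        try:
            with open(os.path.join(proc, pid, "comm")) as f:
                if f.read().strip() == name:
                    return True
        except OSError:
            continue  # process exited or is not readable
    return False

state = numa_balancing_state()
print("automatic NUMA balancing:",
      "unavailable" if state is None else ("off" if state == 0 else f"on (mode {state})"))
print("numad running:", process_running("numad"))
```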

lstopo

lstopo and lstopo-no-graphics are capable of displaying a topological map of the system in a variety of different output formats. The only difference between lstopo and lstopo-no-graphics is that graphical outputs are only supported by lstopo, to reduce dependencies on external libraries. hwloc-ls is identical to lstopo-no-graphics.

```
$ lstopo-no-graphics
```
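lstopo draws the full hierarchy: packages, caches, cores and PCI devices. If hwloc is not installed, a much smaller subset can be read directly from sysfs: each node's CPUs and its row of the node distance table. This is the same information `numactl --hardware` prints alongside node memory sizes. The minimal Python sketch below assumes the standard `/sys/devices/system/node/nodeN/cpulist` and `distance` files; the function names are hypothetical.

```python
import os
import re

def expand_cpulist(text):
    """'0-3,8' -> [0, 1, 2, 3, 8]; an empty list (memory-only node) -> []."""
    cpus = []
    for part in text.strip().split(","):
        if part:
            lo, _, hi = part.partition("-")
            cpus.extend(range(int(lo), int(hi or lo) + 1))
    return cpus

def node_topology(root="/sys/devices/system/node"):
    """Map each NUMA node to its CPUs and its distance row, read from sysfs."""
    topo = {}
    if not os.path.isdir(root):
        return topo
    for name in os.listdir(root):
        m = re.fullmatch(r"node(\d+)", name)
        if not m:
            continue  # skip entries such as 'online' or 'possible'
        node_dir = os.path.join(root, name)
        try:
            with open(os.path.join(node_dir, "cpulist")) as f:
                cpus = expand_cpulist(f.read())
            with open(os.path.join(node_dir, "distance")) as f:
                distance = [int(x) for x in f.read().split()]
        except OSError:
            continue
        topo[int(m.group(1))] = {"cpus": cpus, "distance": distance}
    return topo

for node, info in sorted(node_topology().items()):
    print(f"node{node}: cpus={info['cpus']} distance={info['distance']}")
```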