virtualization-virtio

Overview

When talking about virtio-networking we can separate the discussion into two layers:

- Control plane - Used for capability negotiation between the host and guest, both for establishing and for terminating the data plane.

- Data plane - Used for transferring the actual data (packets) between host and guest.

It’s important to distinguish between these layers since they have different requirements (such as performance) and different implementations.

Fundamentally, the data plane must move packets as efficiently as possible, while the control plane must be flexible enough to support different devices and vendors in future architectures.

virtio specification

The virtio spec defines virtual devices (comparable to devices from a hardware vendor).

Virtio is an open specification for virtual machines’ data I/O communication, offering a straightforward, efficient, standard and extensible mechanism for virtual devices, rather than boutique per-environment or per-OS mechanisms. It uses the fact that the guest can share memory with the host for I/O to implement that.

The virtio specification is based on two elements: devices and drivers. In a typical implementation, the hypervisor (QEMU) exposes the virtio devices to the guest through a number of transport methods. By design they look like physical devices to the guest within the virtual machine.

The most common transport is the PCI or PCIe bus. Alternatively, the device can be made available at a predefined guest memory address (MMIO transport). These devices can be completely virtual with no physical counterpart, or physical ones exposing a compatible interface.

The typical (and easiest) way to expose a virtio device is through a PCI port since we can leverage the fact that PCI is a mature and well supported protocol in QEMU and Linux drivers. Real PCI hardware exposes its configuration space using a specific physical memory address range (i.e., the driver can read or write the device’s registers by accessing that memory range) and/or special processor instructions. In the VM world, the hypervisor captures accesses to that memory range and performs device emulation, exposing the same memory layout that a real machine would have and offering the same responses. The virtio specification also defines the layout of its PCI Configuration space, so implementing it is straightforward.

When the guest boots and uses the PCI/PCIe auto-discovery mechanism, the virtio devices identify themselves with the virtio PCI vendor ID and their PCI device ID. The guest’s kernel uses these identifiers to know which driver must handle the device. In particular, the Linux kernel already includes virtio drivers.
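The identification step can be sketched as a simple lookup. This is a toy illustration, not how the Linux kernel actually binds drivers: `pick_driver` is a hypothetical helper, and the table covers only a handful of device types. It assumes the vendor ID 0x1AF4 used by virtio PCI devices and the rule that a modern (non-transitional) device ID is 0x1040 plus the virtio device type ID.

```python
from typing import Optional

VIRTIO_PCI_VENDOR_ID = 0x1AF4     # vendor ID used by virtio PCI devices
MODERN_DEVICE_ID_BASE = 0x1040    # modern devices: 0x1040 + virtio device type ID

# A few virtio device type IDs mapped to the matching Linux driver modules.
DRIVER_BY_DEVICE_TYPE = {
    1: "virtio_net",
    2: "virtio_blk",
    3: "virtio_console",
    8: "virtio_scsi",
}


def pick_driver(vendor_id: int, device_id: int) -> Optional[str]:
    """Hypothetical helper: choose a driver name from PCI identifiers."""
    if vendor_id != VIRTIO_PCI_VENDOR_ID:
        return None  # not a virtio device
    if device_id < MODERN_DEVICE_ID_BASE:
        return None  # transitional/legacy IDs are out of scope for this sketch
    return DRIVER_BY_DEVICE_TYPE.get(device_id - MODERN_DEVICE_ID_BASE)
```

For example, `pick_driver(0x1AF4, 0x1041)` resolves to the network driver, while a device from another vendor is ignored.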

The virtio drivers must be able to allocate memory regions that both the hypervisor and the devices can access for reading and writing, i.e., via memory sharing. We call data plane the part of the data communication that uses these memory regions, and control plane the process of setting them up.

The virtio kernel drivers share a generic transport-specific interface (e.g., virtio-pci), used by the actual transport and device implementation (such as virtio-net or virtio-scsi).

The virtio network device is a virtual Ethernet card, and it supports multiqueue for TX/RX. Empty buffers are placed in N virtqueues for receiving packets, and outgoing packets are enqueued into another N virtqueues for transmission. One more virtqueue is used for driver-device communication outside of the data plane, for example to control advanced filtering features, settings such as the MAC address, or the number of active queues. Like a physical NIC, the virtio device supports features such as various offloads, and it can let the host’s real device perform them.
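The N receive queues, N transmit queues, and single control queue are numbered in an interleaved order in the virtio specification: receive and transmit queues alternate, and the control queue comes last. A minimal sketch of that numbering follows; the function name and the `has_ctrl_vq` flag are illustrative assumptions, and `max_pairs` stands for the maximum number of queue pairs the device supports.

```python
def virtio_net_queue_layout(max_pairs: int, has_ctrl_vq: bool = True) -> dict:
    """Return {virtqueue index: name} for a virtio-net device (illustrative)."""
    if max_pairs < 1:
        raise ValueError("a virtio-net device has at least one RX/TX pair")
    layout = {}
    for i in range(max_pairs):
        layout[2 * i] = f"receiveq{i + 1}"       # even indexes receive
        layout[2 * i + 1] = f"transmitq{i + 1}"  # odd indexes transmit
    if has_ctrl_vq:
        layout[2 * max_pairs] = "controlq"       # control queue follows all pairs
    return layout
```

With two queue pairs this yields indexes 0 to 3 for the data queues and index 4 for the control queue.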

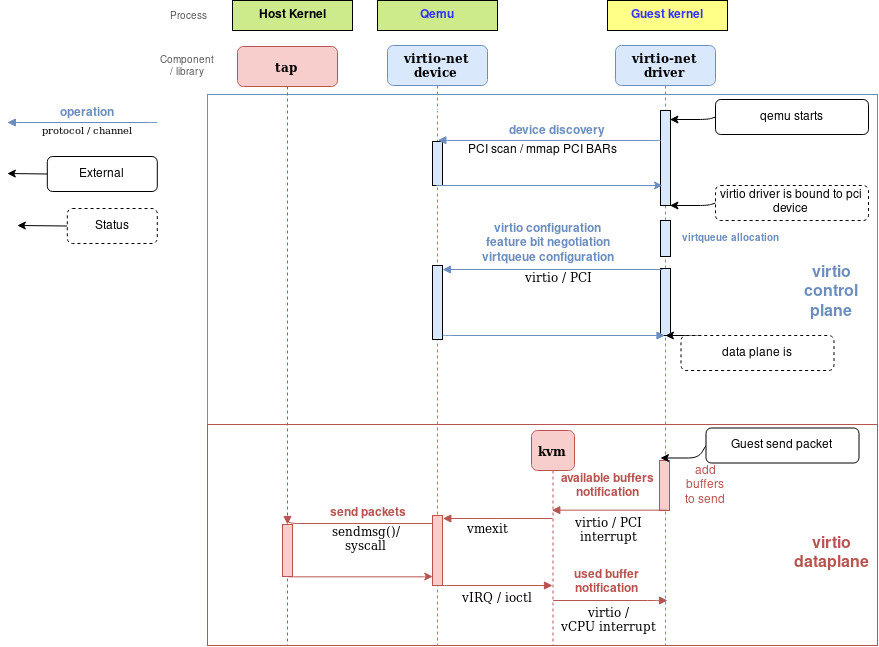

Virtio specification: virtqueues

Virtqueues are the mechanism for bulk data transport on virtio devices. Each device can have zero or more virtqueues. A virtqueue consists of a queue of guest-allocated buffers that the host interacts with either by reading them or by writing to them. In addition, the virtio specification also defines bi-directional notifications:

- Available Buffer Notification: Used by the driver to signal there are buffers that are ready to be processed by the device

- Used Buffer Notification: Used by the device to signal that it has finished processing some buffers.

In the PCI case, the guest sends the available buffer notification by writing to a specific memory address, and the device (in this case, QEMU) uses a vCPU interrupt to send the used buffer notification.

The virtio specification also allows the notifications to be enabled or disabled dynamically. That way, devices and drivers can batch buffer notifications or even actively poll for new buffers in virtqueues (busy polling). This approach is better suited for high traffic rates.
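To make the effect of notification suppression concrete, here is a toy model of a virtqueue. It is a sketch under heavy simplification: the real split and packed ring layouts, descriptor chains, and memory barriers are all omitted, and every name (`ToyVirtqueue`, `device_wants_kick`, and so on) is hypothetical.

```python
from collections import deque


class ToyVirtqueue:
    """Toy virtqueue: two queues plus notification counters (not the real ring)."""

    def __init__(self):
        self.avail = deque()                # buffers offered by the driver
        self.used = deque()                 # results returned by the device
        self.device_wants_kick = True       # available-buffer notifications enabled
        self.driver_wants_interrupt = True  # used-buffer notifications enabled
        self.kicks = 0                      # notifications sent driver -> device
        self.interrupts = 0                 # notifications sent device -> driver

    def driver_add(self, buf):
        """Driver exposes a buffer and notifies the device unless suppressed."""
        self.avail.append(buf)
        if self.device_wants_kick:
            self.kicks += 1

    def device_process(self, handler):
        """Device drains every available buffer, then sends one notification."""
        processed = 0
        while self.avail:
            self.used.append(handler(self.avail.popleft()))
            processed += 1
        if processed and self.driver_wants_interrupt:
            self.interrupts += 1
        return processed
```

If the device sets `device_wants_kick = False` and polls instead, three calls to `driver_add` produce no kicks at all, and a single `device_process` call returns all three buffers with one interrupt.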

In summary, the virtio driver interface exposes:

- Device’s feature bits (which device and guest have to negotiate)

- Status bits

- Configuration space (that contains device specific information, like MAC address)

- Notification system (configuration changed, buffer available, buffer used)

- Zero or more virtqueues

- Transport specific interface to the device
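The first two items in the list, feature bits and status bits, interact during device initialization. The sketch below is a simplified model of that handshake: the constants are the status bit values and a few feature bit positions from the virtio specification, while `bits` and `negotiate` are hypothetical helpers. A real driver also re-reads the status after setting FEATURES_OK to confirm the device accepted the feature set; that step is omitted here.

```python
# Device status bits from the virtio specification.
ACKNOWLEDGE = 1
DRIVER = 2
DRIVER_OK = 4
FEATURES_OK = 8
FAILED = 128

# A few feature bit positions (virtio-net specific and generic).
VIRTIO_NET_F_CSUM = 0
VIRTIO_NET_F_MAC = 5
VIRTIO_NET_F_CTRL_VQ = 17
VIRTIO_NET_F_MQ = 22
VIRTIO_F_VERSION_1 = 32


def bits(*positions):
    """Build a feature mask from bit positions."""
    mask = 0
    for position in positions:
        mask |= 1 << position
    return mask


def negotiate(device_features, driver_supported):
    """Return (status, accepted_features) after a simplified init sequence."""
    status = ACKNOWLEDGE | DRIVER
    # The driver may only accept features that the device offered.
    accepted = device_features & driver_supported
    if not (accepted & bits(VIRTIO_F_VERSION_1)):
        return status | FAILED, 0  # this sketch handles modern devices only
    status |= FEATURES_OK          # feature set is final
    status |= DRIVER_OK            # driver is ready to drive the device
    return status, accepted
```

A device offering CSUM, MAC, MQ and VERSION_1 to a driver that supports MAC, CTRL_VQ and VERSION_1 ends up with only MAC and VERSION_1 negotiated.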

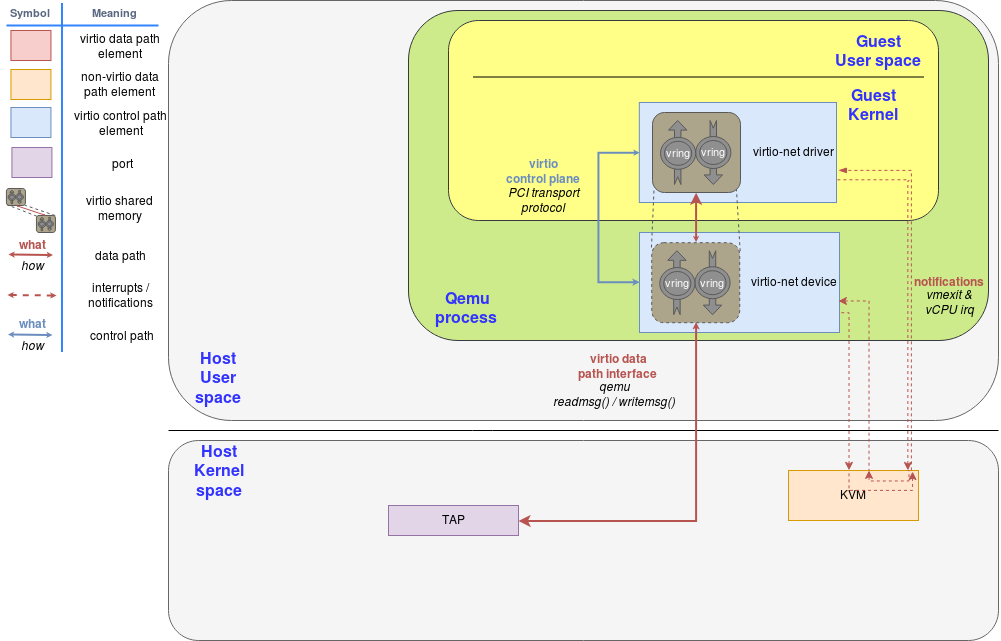

virtio in QEMU (virtio backend (data plane) implemented in QEMU)

Implementing the data plane in QEMU was the earliest approach. It is easy to understand but performs poorly, so it is not used in production environments.

Because the backend lives in QEMU, no vhost protocol is involved.

Sending data diagram

vhost

The vhost protocol enables us to implement a data plane going directly from the kernel (host) to the guest, bypassing the QEMU process.

The vhost protocol itself only describes how to establish the data plane, however. Whoever implements it is also expected to implement the ring layout for describing the data buffers (both host and guest) and the actual send/receive packets.

The vhost API is a message-based protocol that allows the hypervisor (QEMU in this case) to offload the data plane to another component (the handler) that performs data forwarding more efficiently. Using this protocol, the hypervisor sends the following configuration information to the handler:

- The hypervisor’s memory layout. This way, the handler can locate the virtqueues and buffers within the hypervisor’s memory space.

- A pair of file descriptors that the handler uses to send and receive the notifications defined in the virtio spec. These file descriptors are shared between the handler and KVM so they can communicate directly without requiring the hypervisor’s intervention. Note that these notifications can still be dynamically disabled per virtqueue.

After this process, the hypervisor (QEMU in this case) no longer processes packets (it neither reads from nor writes to the virtqueues). Instead, the data plane is completely offloaded to the handler, which can now access the virtqueues’ memory region directly and exchange notifications directly with the guest.

The vhost messages can be exchanged over any host-local transport, such as Unix sockets or character devices, and the hypervisor can act as either a server or a client (in the context of the communication channel). The hypervisor is the leader of the protocol, the offloading device is a handler, and either of them can send messages.
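The memory layout sent to the handler is what lets it turn the guest addresses found in the virtqueues into addresses in its own address space. A minimal sketch of that translation follows. The `MemRegion` fields are loosely modeled on the idea of a vhost memory table, but the names, the `translate` helper, and the example addresses are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemRegion:
    guest_phys_addr: int    # start of the region in guest physical address space
    size: int               # region length in bytes
    handler_virt_addr: int  # where the handler mapped the same memory


def translate(regions, gpa, length=1):
    """Map a guest physical address range to the handler's address space."""
    for region in regions:
        start = region.guest_phys_addr
        end = start + region.size
        # The whole range must fit inside a single region.
        if start <= gpa and gpa + length <= end:
            return region.handler_virt_addr + (gpa - start)
    raise LookupError(f"guest address {gpa:#x} is not covered by any region")


# Example table with two regions (addresses are made up).
REGIONS = [
    MemRegion(0x0, 0xA0000, 0x7F0000000000),
    MemRegion(0x100000, 0x40000000, 0x7F0000100000),
]
```

Here `translate(REGIONS, 0x100010)` returns the handler address `0x7F0000100010`, while an address in the hole between the two regions raises `LookupError`, which is how the handler would detect a buffer pointing outside shared memory.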

The handler can live in the kernel (vhost-net) or in a userspace application (vhost-user).

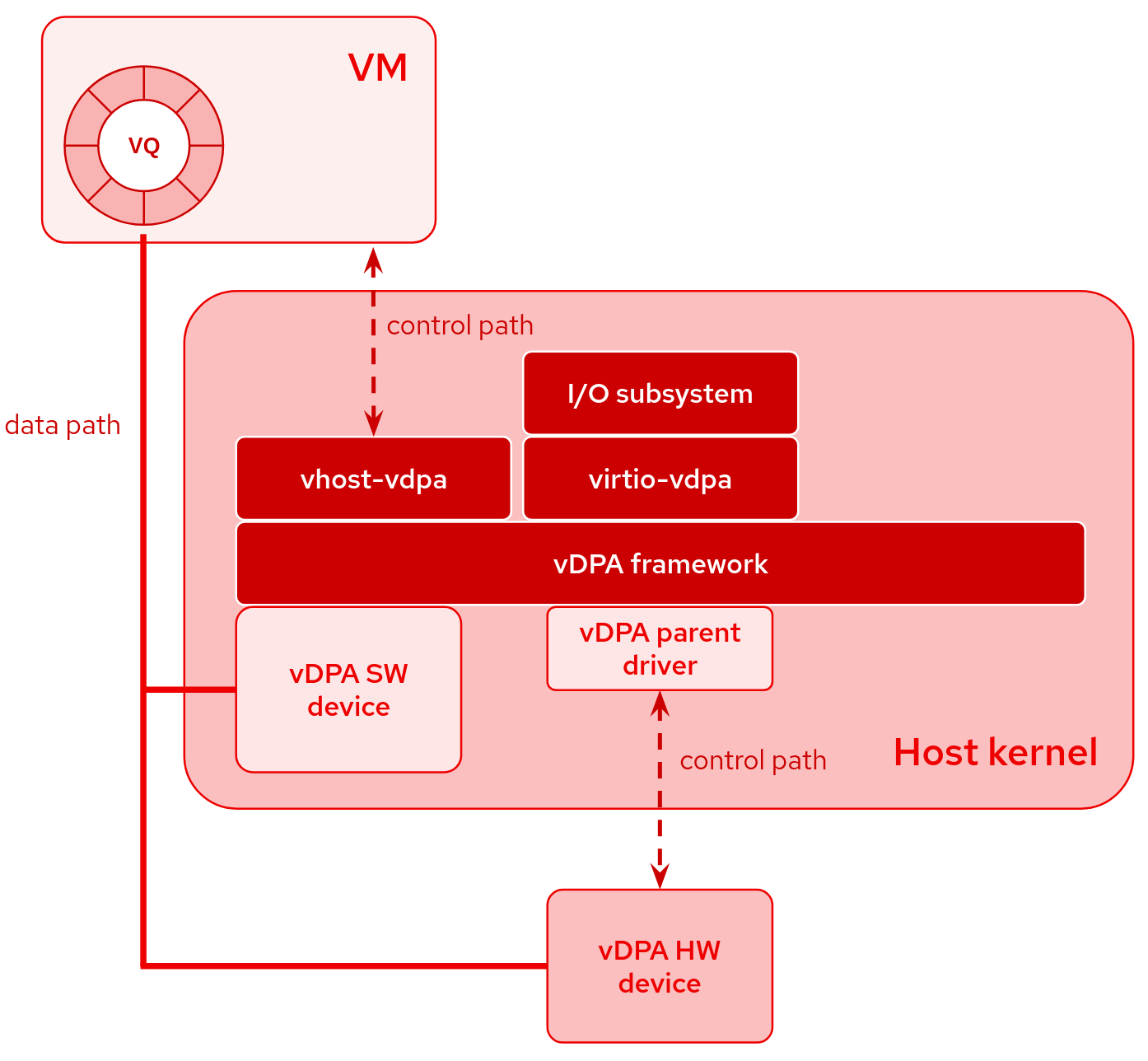

vDPA

Virtio backend (data plane) in hardware

Virtio data path acceleration (vDPA) is, in essence, an approach to standardize the NIC SR-IOV data plane using the virtio ring layout and to place a single standard virtio driver in the guest, decoupled from any vendor implementation, while adding a generic control plane and software infrastructure to support it.

With vDPA, the data plane goes directly from the NIC to the guest using the virtio ring layout (this requires IOMMU support on the host). However, each NIC vendor can continue using its own driver (with a small vDPA add-on), and a generic vDPA driver is added to the kernel to translate the vendor NIC driver/control plane to the virtio control plane.

A “vDPA device” is a device whose data path complies with the virtio specification but whose control path is vendor specific, such as a SmartNIC (for example, Mellanox BlueField). In other words, the virtio data plane is implemented in hardware.

vDPA devices can be either implemented in physical hardware or emulated in software.