virtualization-IO

Introduction

A virtual machine can use a host device in one of three ways:

- Fully emulated devices

- Paravirtualized devices

- Physically shared devices

Full emulation performs poorly for I/O-intensive VMs and is therefore a poor fit for cloud providers, so let’s focus on the latter two.

Paravirtualization provides a fast and efficient way for guests to use devices on the host machine. The most popular implementation is virtio.

Physically shared devices (direct I/O, passthrough) are more efficient than paravirtualized devices for I/O-intensive VMs, but they require hardware IOMMU support.

Virtual Devices

Paravirtualization

Some paravirtualized devices decrease I/O latency and increase I/O throughput to near bare-metal levels, while other paravirtualized devices add functionality to virtual machines that is not otherwise available.

All virtio devices have two parts: the host device (backend) and the guest driver (frontend).

Front-end drivers for different types of virtual devices

The paravirtualized network device (virtio-net)

The paravirtualized network device is a virtual network device that provides network access to virtual machines with increased I/O performance and lower latency.

The paravirtualized block device (virtio-blk)

The paravirtualized block device is a high-performance virtual storage device that provides storage to virtual machines with increased I/O performance and lower latency. The paravirtualized block device is supported by the hypervisor and is attached to the virtual machine (except for floppy disk drives, which must be emulated).

The paravirtualized controller device (virtio-scsi)

The paravirtualized SCSI controller device provides a more flexible and scalable alternative to virtio-blk. A virtio-scsi guest is capable of inheriting the feature set of the target device, and can handle hundreds of devices compared to virtio-blk, which can only handle 28 devices.

The paravirtualized serial device (virtio-serial)

The paravirtualized serial device is a bytestream-oriented, character stream device, and provides a simple communication interface between the host’s user space and the guest’s user space.
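As an illustration, and assuming the VM is managed by libvirt (the text above does not say which management layer is used), a paravirtualized disk or NIC is typically selected in the domain XML with `bus="virtio"` on the disk target and `<model type="virtio"/>` on the interface. The sketch below only builds such XML fragments with Python's standard library; the image path, target name, and network name are placeholders, and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET


def virtio_disk(image_path, target_dev="vda"):
    """Build a libvirt <disk> element attached through the virtio bus (virtio-blk)."""
    disk = ET.Element("disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")
    ET.SubElement(disk, "source", file=image_path)
    ET.SubElement(disk, "target", dev=target_dev, bus="virtio")
    return disk


def virtio_nic(network="default"):
    """Build a libvirt <interface> element that uses the virtio-net model."""
    iface = ET.Element("interface", type="network")
    ET.SubElement(iface, "source", network=network)
    ET.SubElement(iface, "model", type="virtio")
    return iface


def to_xml(element):
    """Serialize an element to a string for pasting into a domain definition."""
    return ET.tostring(element, encoding="unicode")
```

Calling `to_xml(virtio_nic())` yields a fragment that could be placed inside the `<devices>` section of a domain definition.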

Requires:

- Guest: the virtio-xx front-end driver must be installed (most Linux distributions already include it).

- Host: the vhost-xxx back-end driver must be installed (it should already be present on a KVM-enabled machine). The host also needs a driver for the physical device; the guest never accesses the physical device directly, only the back-end driver in KVM does.
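To check the guest side of these requirements, one option is to look at what the guest kernel has registered on its virtio bus. The sketch below assumes a Linux guest that exposes `/sys/bus/virtio/devices`, where each entry has a `device` file holding the virtio device ID in hex. The ID-to-name table is a small subset taken from the virtio specification, and the function name is hypothetical.

```python
from pathlib import Path

# Subset of device IDs from the virtio specification.
VIRTIO_DEVICE_NAMES = {
    1: "network (virtio-net)",
    2: "block (virtio-blk)",
    3: "console (virtio-serial)",
    8: "SCSI host (virtio-scsi)",
}


def list_virtio_devices(sysfs_root="/sys/bus/virtio/devices"):
    """Return (sysfs name, device type) pairs for every virtio device found.

    Returns an empty list when the directory is missing, for example on a
    machine that has no virtio bus.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    found = []
    for dev in sorted(root.iterdir()):
        id_file = dev / "device"
        if not id_file.is_file():
            continue
        dev_id = int(id_file.read_text().strip(), 16)  # e.g. "0x0001" -> 1
        name = VIRTIO_DEVICE_NAMES.get(dev_id, f"unknown (id {dev_id})")
        found.append((dev.name, name))
    return found
```

An empty result inside a guest suggests that the VM is running on emulated or passed-through devices rather than virtio ones.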

Passthrough

VFIO attaches PCI devices on the host system directly to virtual machines, providing guests with exclusive access to PCI devices for a range of tasks. This enables PCI devices to appear and behave as if they were physically attached to the guest virtual machine.

VFIO improves on previous PCI device assignment architecture by moving device assignment out of the KVM hypervisor, and enforcing device isolation at the kernel level.

With VFIO and a hardware IOMMU, the hypervisor can assign a physical device, such as a GPU, directly to a VM. In a cloud environment, however, we usually do not assign a whole physical device (GPU, network card, block device) to a single VM, because we want to share the device among several VMs while still keeping them isolated. This is what SR-IOV does: SR-IOV is a feature provided by the PCI-e device itself, that is, virtualization implemented in hardware.
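VFIO enforces isolation per IOMMU group, so before assigning a device it helps to know which other devices share its group. The sketch below assumes a Linux host with the IOMMU enabled, where the kernel exposes groups under `/sys/kernel/iommu_groups/<n>/devices/`. The function name is hypothetical.

```python
from pathlib import Path


def iommu_groups(sysfs_root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses it contains.

    Returns an empty dict when the directory is missing, which usually means
    the IOMMU is disabled or not present.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return {}
    groups = {}
    for group in root.iterdir():
        devices_dir = group / "devices"
        if group.name.isdigit() and devices_dir.is_dir():
            groups[int(group.name)] = sorted(d.name for d in devices_dir.iterdir())
    return groups
```

A device that sits alone in its group is the simplest candidate for assignment; devices that share a group generally have to be handed over together.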

SR-IOV

SR-IOV (Single Root I/O Virtualization) is a PCI Express (PCI-e) standard that extends a single physical PCI function to share its PCI resources as separate virtual functions (VFs). Each function can be used by a different virtual machine via PCI device assignment. An SR-IOV-capable PCI-e device provides a Single Root function (for example, a single Ethernet port) and presents multiple, separate virtual devices as unique PCI device functions. Each virtual device may have its own unique PCI configuration space, memory-mapped registers, and individual MSI-based interrupts.

SR-IOV defines two types of functions:

- Physical functions (PFs) are full PCI devices that support discovery, management, and configuration like normal PCI devices. There is a single PF pair per NIC and it provides the actual configuration for the full NIC device.

- Virtual functions (VFs) are simple PCI functions that only control part of the device and are derived from physical functions. Multiple VFs can reside on the same NIC.
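On a Linux host, VFs are usually created through the PF's sysfs attributes `sriov_totalvfs` (the maximum the device supports) and `sriov_numvfs` (the number currently enabled). The following is a minimal sketch under that assumption. The helper names are hypothetical, and writing to the real attribute requires root privileges and a PF driver that supports SR-IOV.

```python
from pathlib import Path


def sriov_status(pf_dir):
    """Return (total_vfs, num_vfs) for a PF sysfs directory.

    Returns None when the device does not expose the SR-IOV attributes.
    """
    pf = Path(pf_dir)
    total = pf / "sriov_totalvfs"
    current = pf / "sriov_numvfs"
    if not (total.is_file() and current.is_file()):
        return None
    return int(total.read_text()), int(current.read_text())


def enable_vfs(pf_dir, count):
    """Ask the PF driver to expose `count` virtual functions."""
    status = sriov_status(pf_dir)
    if status is None:
        raise ValueError("device does not expose SR-IOV attributes")
    total, current = status
    if not 0 <= count <= total:
        raise ValueError(f"count must be between 0 and {total}")
    numvfs = Path(pf_dir) / "sriov_numvfs"
    if current != 0 and count not in (0, current):
        # The kernel rejects changing one non-zero count to another,
        # so disable the existing VFs first.
        numvfs.write_text("0")
    numvfs.write_text(str(count))
```

For a real NIC, `pf_dir` would be something like the PF's directory under `/sys/bus/pci/devices/`; each VF then appears as its own PCI function that can be passed to a guest.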

There are two solutions for device passthrough. If both are available for a device, solution two is preferred, but it requires the hardware to support the virtio data plane.

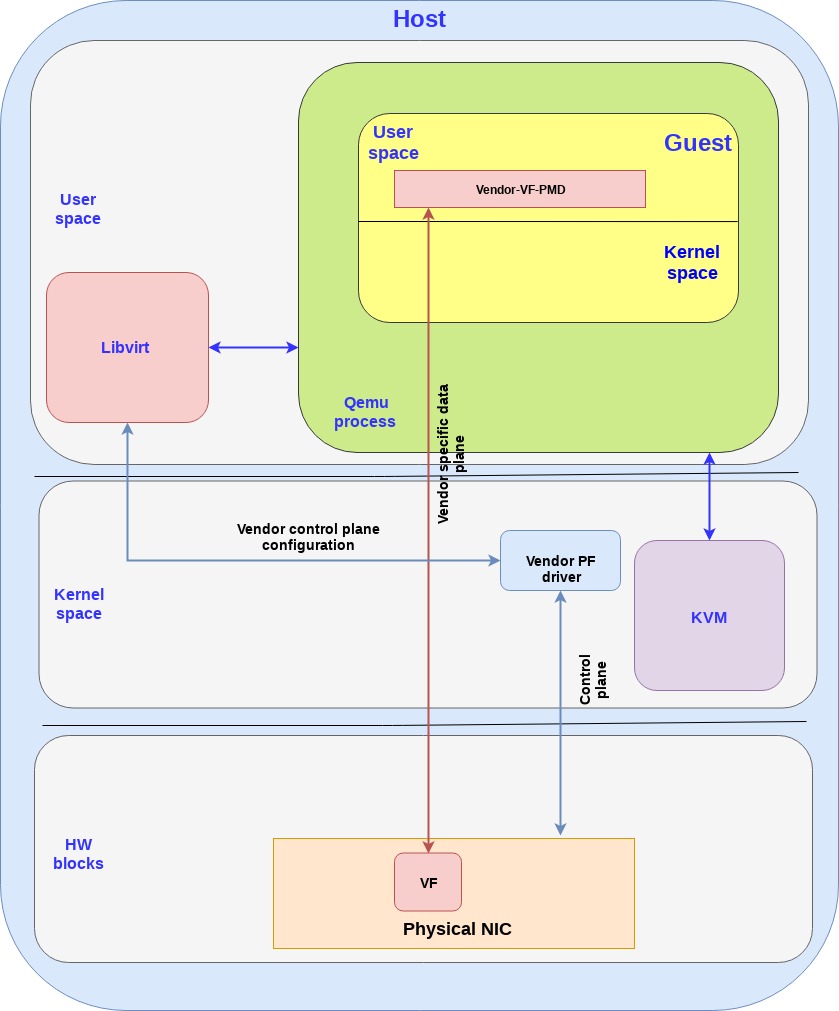

Solution one (vendor-specific driver in guest)

There are two cases for solution one, both of which use a vendor-specific driver in the guest OS:

Using the guest kernel driver: In this approach we use the NIC (vendor-specific) driver in the kernel of the guest, while directly mapping the IO memory, so that the HW device can directly access the memory of the guest kernel.

Using the DPDK PMD driver in the guest: In this approach we use the NIC (vendor-specific) DPDK PMD driver in the guest userspace, while directly mapping the IO memory, so that the HW device can directly access the memory of the specific userspace process in the guest.

Note

The data plane is vendor specific and goes directly to the VF.

For SR-IOV, vendor-specific NIC drivers are required both in the host kernel (PF driver) and in the guest userspace (VF driver) to enable this solution.

The host kernel driver (for the PF) and the guest userspace VF driver don’t communicate directly. The PF/VF drivers are configured through other interfaces (e.g. the host PF driver can be configured by libvirt).

The vendor VF driver in the guest is responsible for configuring the NIC’s VF, while the vendor PF driver in the host kernel space manages the full NIC.

Host requirements: IOMMU support in hardware, the VFIO driver for the VF, and the vendor driver for the PF.
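If libvirt is used to hand the VF to the guest, as the libvirt mention above suggests, the assignment is usually expressed as a `<hostdev>` element that names the VF's PCI address. The sketch below builds that element from an address string; the helper name is hypothetical and the address in the usage note is a placeholder, not one taken from this document.

```python
import re
import xml.etree.ElementTree as ET

# PCI address in the usual domain:bus:slot.function form, e.g. 0000:03:10.0
_PCI_ADDRESS = re.compile(
    r"^([0-9a-fA-F]{4}):([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-7])$"
)


def vf_hostdev_xml(pci_address):
    """Build a libvirt <hostdev> fragment that assigns one PCI function to a guest."""
    match = _PCI_ADDRESS.match(pci_address)
    if match is None:
        raise ValueError(f"not a PCI address: {pci_address!r}")
    domain, bus, slot, function = match.groups()
    # managed="yes" asks libvirt to detach the device from its host driver
    # before the guest starts and to reattach it afterwards.
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(
        source,
        "address",
        domain=f"0x{domain}",
        bus=f"0x{bus}",
        slot=f"0x{slot}",
        function=f"0x{function}",
    )
    return ET.tostring(hostdev, encoding="unicode")
```

For example, `vf_hostdev_xml("0000:03:10.0")` produces a fragment for the `<devices>` section of the guest definition. The guest still needs the matching vendor VF driver, which is the drawback discussed next.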

Installing a vendor driver in the guest has several drawbacks:

- It requires a match between the drivers running in the guest and the actual physical NIC.

- If the NIC firmware is upgraded, the guest application driver may need to be upgraded as well.

- If the NIC is replaced with a NIC from another vendor, the guest must use a different driver for the new NIC.

- Migration of a VM can only be done to a host with the exact same configuration.

Solution two

Use a generic driver (virtio) in the guest OS. This requires the hardware (the VF) to support the virtio data plane.

Can we use the virtio driver in the guest OS to operate a VF directly?

Without hardware support, no. A VF is vendor specific: each vendor uses its own format for transferring data, so the VF does not understand the data sent by a virtio driver. The vendor must therefore implement the virtio spec (the ring layout) in the VF hardware, so that the data plane can recognize data in virtio format and put it on the wire. That covers only the data plane. Before anything can be sent, a control plane must prepare the VF, for example by setting registers. If the guest speaks virtio, something in the middle has to translate virtio control operations into vendor-specific ones. That is what the virtio data path acceleration (vDPA) framework does, and it handles only the control plane. In summary: offload the data plane to hardware, and use the vDPA framework for the control plane.
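To make "ring layout" more concrete, here is a sketch of a single descriptor from the split virtqueue format in the virtio specification: a 64-bit buffer address, a 32-bit length, 16-bit flags, and a 16-bit index of the next descriptor, all little-endian. This is the in-memory format that hardware has to parse when the data plane is offloaded. Only the descriptor is shown (the available and used rings are omitted), and the helper names are hypothetical.

```python
import struct

# struct vring_desc { le64 addr; le32 len; le16 flags; le16 next; }  -> 16 bytes
VRING_DESC = struct.Struct("<QIHH")

VRING_DESC_F_NEXT = 1   # the buffer continues in the descriptor at index `next`
VRING_DESC_F_WRITE = 2  # the device writes into this buffer instead of reading it


def pack_desc(addr, length, flags=0, next_idx=0):
    """Encode one descriptor exactly as it would sit in guest memory."""
    return VRING_DESC.pack(addr, length, flags, next_idx)


def unpack_desc(raw):
    """Decode 16 raw bytes into the descriptor fields."""
    addr, length, flags, next_idx = VRING_DESC.unpack(raw)
    return {
        "addr": addr,
        "len": length,
        "chained": bool(flags & VRING_DESC_F_NEXT),
        "device_writable": bool(flags & VRING_DESC_F_WRITE),
        "next": next_idx,
    }
```

Because every virtio-capable VF reads the same 16-byte layout, the guest driver does not need to know which vendor built the NIC.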

Why not offload the control plane to hardware as well?

The control plane implements the virtio control spec, including discovery, feature negotiation, establishing and terminating the data plane, and so on. It is more complicated and requires interaction with memory management units, hence it is not offloaded to hardware.
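Feature negotiation is one of the simpler control-plane steps and gives a feel for what this layer does: the device offers a bitmask of features, and the driver accepts the subset it also understands. The sketch below models only that intersection. The bit numbers are from the virtio specification, while the function names are hypothetical and the real handshake involves further status steps that are not shown.

```python
# Feature bit numbers from the virtio specification (small subset).
VIRTIO_NET_F_CSUM = 0        # device handles packets with partial checksum
VIRTIO_NET_F_MAC = 5         # device has a given MAC address
VIRTIO_NET_F_MRG_RXBUF = 15  # driver can merge receive buffers
VIRTIO_F_VERSION_1 = 32      # compliance with the virtio 1.0+ specification


def feature_mask(*bits):
    """Combine feature bit numbers into one integer bitmask."""
    mask = 0
    for bit in bits:
        mask |= 1 << bit
    return mask


def negotiate(device_features, driver_features):
    """Keep only the features that both the device and the driver support."""
    return device_features & driver_features


def has_feature(features, bit):
    """Check whether a feature bit is set in a bitmask."""
    return bool(features & (1 << bit))
```

With a device offering CSUM, MAC, and VERSION_1 and a driver supporting MAC, MRG_RXBUF, and VERSION_1, the negotiated set contains only MAC and VERSION_1.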

With vDPA, the data plane goes directly from the NIC to the guest using the virtio ring layout. A generic vDPA driver is added to the kernel, and it calls the vendor-specific driver (the vendor vDPA driver) for the virtio control plane.

Solution two requires:

- Guest: the virtio driver.

- Host: IOMMU support from the CPU, the VFIO driver, virtio support in the PCI-e hardware (SmartNIC), the generic vDPA framework, the vendor-specific vDPA plugin, and the vendor driver (generic vDPA -> vendor vDPA plugin -> vendor driver).

Pros

- Live migration: because the ring layout in the guest is now standard, live migration becomes possible between NICs from different vendors and of different versions.

- The bare-metal vision (a single generic NIC driver): looking forward, the virtio-net driver can be enabled as a bare-metal driver, using the vDPA software infrastructure in the kernel so that a single generic NIC driver can drive different hardware NICs (similar to the NVMe driver for storage devices).