storage_nbd

Introduction

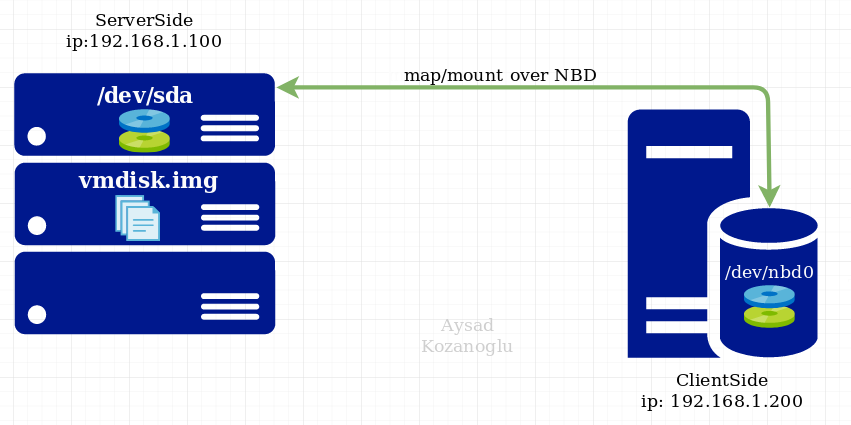

Network block devices (NBD) provide access to a remote storage device that does not physically reside on the local machine. Each network block device is mapped on the client to a device node (`/dev/nbdX`) and appears as a local block device, so you can perform low-level operations on it, such as partitioning it or creating a filesystem on it, which NFS cannot do.
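The low-level operations mentioned above can be sketched as follows, assuming an export is already attached as `/dev/nbd0` on the client. The mount point `/mnt/nbd`, the single ext4 partition and the helper names are hypothetical, and the partition node `/dev/nbd0p1` only appears if the `nbd` kernel module was loaded with `max_part` greater than 0. The script prints each command and only executes it when `DRY_RUN=0` (as root).

```shell
#!/bin/sh
# Print each command; execute it only when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    printf '+ %s\n' "$*"
    if [ "$DRY_RUN" = 0 ]; then
        "$@"
    fi
}

# Partition, format and mount an NBD device that is already connected.
# $1: device (example: /dev/nbd0), $2: mount point (hypothetical)
nbd_format_and_mount() {
    dev=$1
    mnt=$2
    run parted -s "$dev" mklabel gpt mkpart primary ext4 1MiB 100%
    run mkfs.ext4 "${dev}p1"
    run mkdir -p "$mnt"
    run mount "${dev}p1" "$mnt"
}

nbd_format_and_mount /dev/nbd0 /mnt/nbd
```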

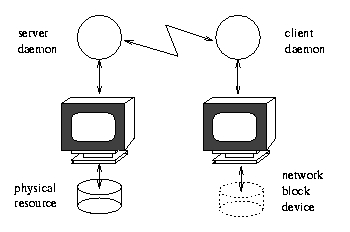

NBD follows a client/server architecture: the server makes a volume available as a network block device on one host, and the client connects to it from another host.
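A minimal sketch of that flow, assuming the `nbd` user-space tools are installed on both hosts and the server's exports are defined in `/etc/nbd-server/config`. The client selects an export by name with `nbd-client -N`. The helper names, host name and export name are hypothetical; commands are printed and only executed when `DRY_RUN=0`.

```shell
#!/bin/sh
# Print each command; execute it only when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    printf '+ %s\n' "$*"
    if [ "$DRY_RUN" = 0 ]; then
        "$@"
    fi
}

# Server host: start nbd-server with an explicit config file.
nbd_serve() {
    run nbd-server -C /etc/nbd-server/config
}

# Client host: load the kernel module, then attach export $2
# from server $1 to the local device $3.
nbd_attach() {
    run modprobe nbd
    run nbd-client -N "$2" "$1" "$3"
}

# Client host: disconnect the device again.
nbd_detach() {
    run nbd-client -d "$1"
}
```

For example, with `DRY_RUN=0` set, run `nbd_serve` on the server host and `nbd_attach nbd-server.example export1 /dev/nbd0` as root on the client; `nbd_detach /dev/nbd0` releases the device.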

All commands were verified on CentOS 7.

NBD uses TCP as its transport protocol. Old-style servers listened on an arbitrary port per export; the current (newstyle) protocol uses the IANA-assigned port 10809 by default.

A typical session proceeds as follows:

- The client accesses `/dev/nbdX` after the NBD device is connected.

- The client and server perform negotiation.

- The client (in practice, the kernel) sends a read request to the server, specifying the start offset and the length of the data to be read.

- The server sends a read reply containing an error code (if any); if the error code is zero, the reply header is immediately followed by the data.

- The client sends a write request, specifying the start offset and the length of the data to be written, immediately followed by the raw data.

- The server writes the data out and sends a write reply containing an error code. If no error occurred, the data is assumed to have been written to disk.

- The client sends a disconnect request.

- The server disconnects.
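To make the read request concrete: in the transmission phase of the NBD protocol, a request header is 28 bytes, all big-endian: the magic `0x25609513`, 16-bit command flags, a 16-bit command type (`0` read, `1` write, `2` disconnect), a 64-bit handle that the server echoes in its reply, a 64-bit offset and a 32-bit length. The simple reply is 16 bytes: the magic `0x67446698`, a 32-bit error code and the handle. The sketch below only renders and splits these headers as hex strings (the function names are hypothetical); it does not talk to a server.

```shell
#!/bin/sh
# Hex-encode an NBD request header (flags fixed to 0):
# magic, flags, type, handle, offset, length.
# $1: type (0=read, 1=write, 2=disconnect), $2: handle, $3: offset, $4: length
nbd_request_hex() {
    printf '%08x%04x%04x%016x%016x%08x' 0x25609513 0 "$1" "$2" "$3" "$4"
}

# Split a 32-hex-digit simple reply into "magic error handle".
nbd_parse_reply_hex() {
    magic=$(printf '%s' "$1" | cut -c1-8)
    error=$(printf '%s' "$1" | cut -c9-16)
    handle=$(printf '%s' "$1" | cut -c17-32)
    if [ "$magic" != "67446698" ]; then
        echo "bad reply magic: $magic" >&2
        return 1
    fi
    printf '%s %s %s\n' "$magic" "$error" "$handle"
}

# Read request: handle 1, 4096 bytes starting at offset 0.
nbd_request_hex 0 1 0 4096
echo
```

A zero error field in the reply means the data follows the 16-byte header, matching the read-reply step above.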

NBD example

To use NBD, install the NBD server on the server machine and the NBD client on the client machine. The client also needs the `nbd` kernel module, which creates the `/dev/nbdX` devices so that users see each export as a local block device.
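On CentOS 7 the installation might look like the sketch below. It assumes the `nbd` package (providing `nbd-server` and `nbd-client`) is available from the EPEL repository and that an `nbd` kernel module has been installed for the running kernel, since `modprobe` can only load a module that exists. `max_part=8` is an example value that lets the kernel create partition nodes such as `/dev/nbd0p1`. The helper names are hypothetical; commands are printed and only executed when `DRY_RUN=0` (as root).

```shell
#!/bin/sh
# Print each command; execute it only when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    printf '+ %s\n' "$*"
    if [ "$DRY_RUN" = 0 ]; then
        "$@"
    fi
}

# Both hosts: user-space tools (assumed to come from EPEL on CentOS 7).
nbd_install_tools() {
    run yum install -y epel-release
    run yum install -y nbd
}

# Client host: load the kernel module so /dev/nbdX devices exist.
# max_part=8 is an example; partition nodes need max_part > 0.
nbd_load_module() {
    run modprobe nbd max_part=8
}

nbd_install_tools
nbd_load_module
```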

NBD can export either a real device or a virtual disk (an image file) from the server; the client then attaches it over the NBD protocol and can mount it.

Verified on CentOS 7.6.

```shell
# centos
```

Export and mount a real device from a remote host

```shell
# server side: export a local real device via /etc/nbd-server/config (create this file if it does not exist)
```
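A minimal sketch of such a config, written by a small helper so it can be checked before use. The `[generic]` section holds server-wide options and every further section defines one export whose backing path is given by `exportname`. The export name `realdisk`, the device `/dev/sdb` and the helper name are hypothetical, and the helper overwrites the target file.

```shell
#!/bin/sh
# Write a minimal nbd-server config exporting one block device.
# $1: config path, $2: export name, $3: device path
write_nbd_device_config() {
    cat > "$1" <<EOF
[generic]
# server-wide options (none needed for this sketch)

[$2]
    exportname = $3
EOF
}
```

For example, as root on the server: `write_nbd_device_config /etc/nbd-server/config realdisk /dev/sdb`, then (re)start `nbd-server`; the client attaches it with `nbd-client -N realdisk <server> /dev/nbd0`.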

Export and mount two disk files from a remote host

```shell
# create a virtual disk
```
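For the two-file case, one way to proceed (a sketch, not necessarily the exact commands used here) is to create sparse image files with `truncate` and list each as its own export section. The directory, size, export names and helper name are hypothetical; use an absolute directory so `exportname` holds an absolute path. A sparse file only reserves its apparent size, and blocks are allocated as the client writes.

```shell
#!/bin/sh
# Create sparse disk images and an nbd-server config exporting each one.
# $1: config path, $2: image directory, $3: size (e.g. 1G), rest: export names
make_nbd_file_exports() {
    config=$1
    dir=$2
    size=$3
    shift 3
    mkdir -p "$dir"
    printf '[generic]\n' > "$config"
    for name in "$@"; do
        # Sparse file: apparent size is $size, blocks are allocated on write.
        truncate -s "$size" "$dir/$name.img"
        printf '\n[%s]\n    exportname = %s\n' "$name" "$dir/$name.img" >> "$config"
    done
}
```

For example, `make_nbd_file_exports /etc/nbd-server/config /var/lib/nbd 1G disk1 disk2` on the server; the client can then attach them separately with `nbd-client -N disk1 <server> /dev/nbd0` and `nbd-client -N disk2 <server> /dev/nbd1`.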

Mount a virtual qcow2 disk image

```shell
# edit /etc/nbd-server/config, create it if it does not exist
```
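nbd-server exports a file's bytes as they are stored and does not interpret the qcow2 format, so exporting a `.qcow2` file directly would give the client the image container rather than the virtual disk inside it. One possible approach (an assumption, not necessarily the setup used here) is to attach the image on the server with QEMU's `qemu-nbd`, which also needs the `nbd` kernel module on the server, and then list the resulting device as `exportname` in `/etc/nbd-server/config`. The image path, device and helper names are hypothetical; commands are printed and only executed when `DRY_RUN=0` (as root).

```shell
#!/bin/sh
# Print each command; execute it only when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    printf '+ %s\n' "$*"
    if [ "$DRY_RUN" = 0 ]; then
        "$@"
    fi
}

# Server host: expose the qcow2 image's virtual disk as a local block
# device, which can then be used as exportname in the nbd-server config.
# $1: qcow2 image, $2: local nbd device on the server
qcow2_attach_local() {
    run modprobe nbd max_part=8
    run qemu-nbd --format=qcow2 --connect="$2" "$1"
}

# Server host: undo the local attachment.
qcow2_detach_local() {
    run qemu-nbd --disconnect "$1"
}
```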