k8s_network_cni

Introduction

CNI (Container Network Interface), a Cloud Native Computing Foundation project, consists of a specification and libraries for writing plugins to configure network interfaces in Linux containers, along with a number of supported plugins. CNI concerns itself only with network connectivity of containers and removing allocated resources when the container is deleted. Because of this focus, CNI has a wide range of support and the specification is simple to implement.

A CNI plugin is responsible for inserting a network interface into the container network namespace (e.g., one end of a virtual ethernet (veth) pair) and making any necessary changes on the host (e.g., attaching the other end of the veth to a bridge). It then assigns an IP address to the interface and sets up the routes by invoking the IP Address Management (IPAM) plugin specified in the network configuration.

Main tasks

- 🔴insert an interface into the container

- 🔴assign an IP address to the container

- 🔴set up routes or iptables rules
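The three tasks above show up directly in what a plugin returns from ADD. The sketch below assembles a result object in the shape the CNI spec defines for version 1.0.0 (interfaces, ips, routes). The helper name make_add_result and all sample values are hypothetical, and iptables rules do not appear because the result only reports interfaces, addresses, routes and DNS.

```python
import json


def make_add_result(ifname, mac, netns, address, gateway, cni_version="1.0.0"):
    """Build a CNI ADD result (hypothetical helper; fields follow the CNI 1.0.0 result format)."""
    return {
        "cniVersion": cni_version,
        # Task 1: the interface inserted into the container; "sandbox" is its netns path.
        "interfaces": [{"name": ifname, "mac": mac, "sandbox": netns}],
        # Task 2: the address assigned to interfaces[0], in CIDR notation.
        "ips": [{"address": address, "gateway": gateway, "interface": 0}],
        # Task 3: routes configured inside the container (here a default route).
        "routes": [{"dst": "0.0.0.0/0", "gw": gateway}],
    }


result = make_add_result("eth0", "0a:58:0a:f4:01:02", "/var/run/netns/pod-a",
                         "10.244.1.2/24", "10.244.1.1")
print(json.dumps(result, indent=2))
```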

Plugins provided by the CNI project

Interface:

- bridge: create a bridge and add the container to it

- loopback: set up the loopback interface

- ptp: create a veth pair

- vlan: create a VLAN device

IPAM: IP assignment

- host-local: create and maintain a local database of allocated IPs

Meta:

- flannel: create the flannel device and set routes for cross-node communication

- tuning: adjust the sysctl settings of an existing device

- portmap: map host ports to container ports using iptables
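To see how the three plugin families combine, here is a sketch of a network configuration list (conflist) that chains an interface plugin (bridge, using host-local for IPAM) with the tuning and portmap meta plugins. Per the CNI spec, the runtime runs the chain in order on ADD (and CHECK), handing each plugin the previous result, and in reverse order on DEL. The network name, bridge name, subnet and sysctl value are hypothetical example values; the field names are those documented for the reference plugins.

```python
import json


def make_conflist(name="examplenet", bridge="cni0", subnet="10.22.0.0/16"):
    """Hypothetical conflist: an interface plugin with IPAM, followed by two meta plugins."""
    return {
        "cniVersion": "1.0.0",
        "name": name,
        "plugins": [
            {
                "type": "bridge",        # interface plugin: bridge on the host, veth into the container
                "bridge": bridge,
                "isGateway": True,
                "ipMasq": True,
                "ipam": {                # IPAM plugin that bridge invokes to obtain an address
                    "type": "host-local",
                    "ranges": [[{"subnet": subnet}]],
                    "routes": [{"dst": "0.0.0.0/0"}],
                },
            },
            # meta plugin: set a sysctl inside the container's network namespace
            {"type": "tuning", "sysctl": {"net.core.somaxconn": "500"}},
            # meta plugin: host port -> container port mappings via iptables
            {"type": "portmap", "capabilities": {"portMappings": True}},
        ],
    }


def execution_order(conflist, command):
    """Plugin types in the order the runtime calls them for the given command."""
    types = [p["type"] for p in conflist["plugins"]]
    if command in ("ADD", "CHECK"):
        return types
    if command == "DEL":
        return list(reversed(types))
    raise ValueError(f"unsupported command: {command}")


print(json.dumps(make_conflist(), indent=2))
```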

Plugin

Kubernetes network model

Kubernetes imposes the following fundamental requirements on any networking implementation (barring any intentional network segmentation policies):

- pods on a node can communicate with all pods on all nodes without NAT

- agents on a node (e.g. system daemons, kubelet) can communicate with all pods on that node

But Kubernetes does NOT implement this model itself, including communication between pods on different nodes. That is left to network plugins, most of which implement CNI, so that Kubernetes can configure pod networking through the standard CNI API.
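One common way plugins meet the no-NAT requirement across nodes is to give every node its own disjoint pod subnet and route between nodes. The sketch below carves per-node subnets out of a cluster CIDR and lists the routes a node would need in the style of flannel's host-gw backend (each remote pod subnet via the owning node's IP). The cluster CIDR, node names, node IPs and helper names are hypothetical; in a real cluster the per-node subnet is handed out by the control plane or by the plugin itself rather than assigned in list order.

```python
import ipaddress


def assign_node_subnets(cluster_cidr, nodes, subnet_len=24):
    """Carve one disjoint pod subnet per node out of the cluster CIDR (hypothetical helper)."""
    pool = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=subnet_len)
    assigned = {}
    for node in nodes:
        try:
            assigned[node] = next(pool)
        except StopIteration:
            raise ValueError(f"{cluster_cidr} has no room for another /{subnet_len}") from None
    return assigned


def host_gw_routes(local_node, node_ips, node_subnets):
    """Routes local_node needs: each remote pod subnet via the IP of the node that owns it."""
    return sorted(
        (str(subnet), node_ips[node])
        for node, subnet in node_subnets.items()
        if node != local_node
    )


subnets = assign_node_subnets("10.244.0.0/16", ["node-a", "node-b", "node-c"])
node_ips = {"node-a": "192.168.0.10", "node-b": "192.168.0.11", "node-c": "192.168.0.12"}
for dst, via in host_gw_routes("node-a", node_ips, subnets):
    print(f"ip route add {dst} via {via}")
```

Because the subnets never overlap, every pod IP is unique cluster-wide, so packets can be routed to the right node without rewriting addresses.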

CNI plugins

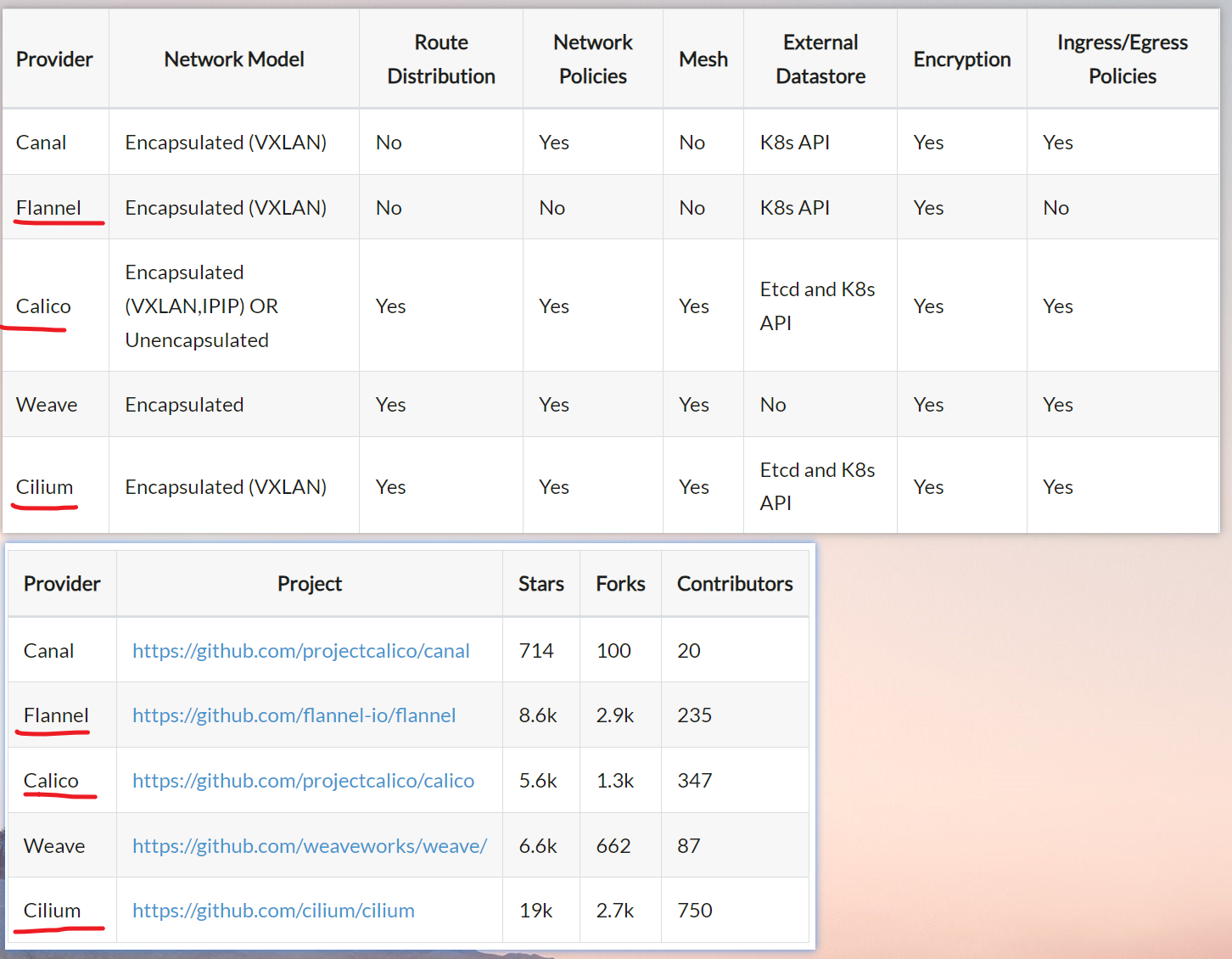

- Calico: Calico is an open source networking and network security solution for containers, virtual machines, and native host-based workloads.

- Cilium: Cilium is open source software for providing and transparently securing network connectivity between application containers. Cilium is L7/HTTP aware and can enforce network policies on L3-L7 using an identity-based security model that is decoupled from network addressing, and it can be used in combination with other CNI plugins.

- Flannel: Flannel is a very simple overlay network that satisfies the Kubernetes requirements.

- OVN: OVN is an open source network virtualization solution developed by the Open vSwitch community. It lets one create logical switches, logical routers, stateful ACLs, load balancers, etc. to build different virtual networking topologies.

- ACI: Cisco Application Centric Infrastructure offers an integrated overlay and underlay SDN solution that supports containers, virtual machines, and bare metal servers

- Antrea: It leverages Open vSwitch as the networking data plane.

- AWS VPC CNI for Kubernetes

- Azure CNI for Kubernetes

- Google Compute Engine (GCE)

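👍How a CNI plugin is called

- When the container runtime needs to perform network operations on a container, it calls the CNI plugin with the desired command (ADD, DEL, etc.). In Kubernetes this caller was the kubelet; since the removal of dockershim in v1.24 it is the CRI runtime (e.g., containerd or CRI-O).

- The container runtime also passes the network configuration (as JSON on stdin) and container-specific data (as environment variables such as CNI_CONTAINERID, CNI_NETNS and CNI_IFNAME) to the plugin.

- The CNI plugin performs the required operations and reports the result (as JSON on stdout, or an error object with a non-zero exit code).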
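The calling convention above can be sketched from the runtime's side: the command and container data go into the CNI_* environment variables defined by the spec, the network configuration is written to the plugin's stdin, and the result is read back from stdout. The function names are hypothetical and error handling is minimal; a real runtime also handles conflists, passing the previous result along, and version negotiation.

```python
import json
import os
import subprocess


def cni_env(command, container_id, netns, ifname, cni_path):
    """Environment for one plugin call, using the CNI_* variables from the CNI spec."""
    env = dict(os.environ)
    env.update({
        "CNI_COMMAND": command,          # ADD, DEL, CHECK or VERSION
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns,              # path of the container's network namespace
        "CNI_IFNAME": ifname,            # interface name to create inside the container
        "CNI_PATH": cni_path,            # where the plugin looks for other plugins (e.g. IPAM)
    })
    return env


def invoke_plugin(plugin_argv, command, container_id, netns, ifname, cni_path, netconf):
    """Run a plugin: network config as JSON on stdin, result (or error) as JSON on stdout."""
    proc = subprocess.run(
        plugin_argv,
        input=json.dumps(netconf).encode(),
        env=cni_env(command, container_id, netns, ifname, cni_path),
        capture_output=True,
    )
    out = proc.stdout.decode()
    if proc.returncode != 0:
        # On failure the plugin prints an error object such as {"code": ..., "msg": ...}.
        try:
            err = json.loads(out)
            detail = f"code {err['code']}: {err['msg']}"
        except (ValueError, KeyError, TypeError):
            detail = out or proc.stderr.decode()
        raise RuntimeError(f"CNI {command} failed: {detail}")
    # A successful DEL may print nothing, so an empty result is allowed.
    return json.loads(out) if out.strip() else {}
```

Pointed at a real binary such as /opt/cni/bin/bridge, the call needs root privileges and an existing network namespace at the CNI_NETNS path.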

Flannel

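The CNI plugin is selected by passing the kubelet the --network-plugin=cni command-line option. The kubelet reads a file from --cni-conf-dir (default /etc/cni/net.d) and uses the CNI configuration from that file to set up each pod's network. The CNI configuration file must match the CNI specification, and any required CNI plugins referenced by the configuration must be present in --cni-bin-dir (default /opt/cni/bin). These kubelet flags were removed in Kubernetes v1.24 together with dockershim; since then the container runtime (e.g., containerd) reads the CNI configuration, by default from the same directories.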
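The lookup described above can be sketched as follows: list the configuration directory, take the file that sorts first by name (the Kubernetes documentation states that the lexicographically first configuration file is used), and check that every plugin binary it names exists in the binary directory. The helper names and the accepted extension set are assumptions; the sketch only checks plugins referenced directly by type and ipam.type, not ones a meta plugin invokes on its own (flannel, for example, delegates to bridge and host-local).

```python
import json
import os

# Assumption: the same extensions the CNI config loaders accept.
CONF_EXTENSIONS = (".conf", ".conflist", ".json")


def load_default_network(conf_dir="/etc/cni/net.d"):
    """Return (file name, parsed config) of the config file that sorts first by name."""
    names = sorted(n for n in os.listdir(conf_dir) if n.endswith(CONF_EXTENSIONS))
    if not names:
        raise FileNotFoundError(f"no CNI configuration in {conf_dir}")
    with open(os.path.join(conf_dir, names[0])) as f:
        return names[0], json.load(f)


def referenced_plugins(conf):
    """Plugin types named directly by a config: each plugin's type plus its ipam.type."""
    entries = conf.get("plugins", [conf])   # a .conflist has "plugins"; a .conf is a single entry
    types = []
    for entry in entries:
        types.append(entry["type"])
        ipam_type = entry.get("ipam", {}).get("type")
        if ipam_type:
            types.append(ipam_type)
    return types


def missing_binaries(conf, bin_dir="/opt/cni/bin"):
    """Referenced plugin types with no executable file of that name in bin_dir."""
    return [t for t in referenced_plugins(conf)
            if not os.access(os.path.join(bin_dir, t), os.X_OK)]
```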

CNI Related Files

- Kubelet has the --network-plugin=cni command-line option

- CNI conf file at --cni-conf-dir (default /etc/cni/net.d)

- CNI plugin binaries at --cni-bin-dir (default /opt/cni/bin)

- host-local IPAM records used IP addresses at /var/lib/cni/networks/cbr0 (cbr0 being the network name from the CNI configuration)
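The host-local store at that path is essentially one file per allocated IP, named after the address and recording the owning container. The sketch below imitates that layout in a temporary directory; it is a simplified stand-in, not the plugin's code. It reserves addresses with an exclusive file create instead of the plugin's file locking, always scans from the start of the subnet (real host-local continues round-robin from the last reserved IP, so a freed address is not reused immediately), and treats the first host address as the gateway. The helper names are hypothetical.

```python
import ipaddress
import os


def allocate_ip(store_dir, subnet, container_id, ifname="eth0"):
    """Reserve the lowest free address in subnet, host-local style (simplified sketch)."""
    os.makedirs(store_dir, exist_ok=True)
    hosts = iter(ipaddress.ip_network(subnet).hosts())
    gateway = next(hosts)                      # simplification: first host address is the gateway
    for ip in hosts:
        path = os.path.join(store_dir, str(ip))
        try:
            # O_EXCL: creation fails if another caller already holds this address.
            fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY, 0o644)
        except FileExistsError:
            continue
        with os.fdopen(fd, "w") as f:
            f.write(f"{container_id}\n{ifname}\n")   # owner record (format simplified)
        return ip, gateway
    raise RuntimeError(f"no free address left in {subnet}")


def release_ip(store_dir, container_id):
    """Delete every reservation owned by container_id, as a DEL would; return the freed IPs."""
    freed = []
    for name in os.listdir(store_dir):
        try:
            ipaddress.ip_address(name)          # skip anything that is not an IP file
        except ValueError:
            continue
        path = os.path.join(store_dir, name)
        with open(path) as f:
            owner = f.readline().strip()
        if owner == container_id:
            os.remove(path)
            freed.append(name)
    return sorted(freed)
```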